TimeBase streams vs TimeBase topics

January 2024

Overview

This article compares the producer-to-consumer latency of TimeBase topics and TimeBase streams for Ember algorithms.

Scope

In this experiment, we focus on the scenario where market data producers and consumers are co-located on the same host. This allows TimeBase topics to run in IPC (shared memory) mode. Note that even in this scenario, the durable carbon copy stream that TimeBase writes for each topic makes market data available to less latency-critical remote clients as well.

Experiment

Ember's latency-mm benchmark was used in this experiment. This benchmark models the market data reception pipeline of Deltix MarketMaker and similar setups. It uses Terraform and Ansible to provision an on-demand AWS environment for the experiment described below. The Ansible playbook has a single flag, use_topics, that switches the data pipeline from streams to topics.

Market data producers were simulated with multiple instances of Ember's FakeFeedAlgorithm, which generates a realistic Level 2 price feed:

- 25 instances of FakeFeedAlgorithm simulated 25 exchanges (n=25), each handling 10 trading contracts.

- The combined market data rate from these instances was 50,000 messages per second.

- The maximum order book depth was configured to 50 bids and 50 asks.

Market data consumption was handled by the LatencyStatAlgorithm, a simplified version of the MarketMaker algorithm. It captures the producer-to-consumer latency of each individual market data message and records it in an HDR Histogram. In our experiment, latency percentiles extracted from this histogram were recorded at five-second intervals; at 50,000 messages per second, each interval represents statistics for the last 250,000 messages.
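The rate figures above fit together with simple arithmetic, and the per-interval percentiles can be illustrated with a small sketch. The Python below is a hypothetical illustration, not LatencyStatAlgorithm code: it computes how many messages one 5-second reporting window covers, the per-feed and per-contract rates assuming the load is split evenly (an assumption; only the combined rate is given), and exact nearest-rank percentiles over one window's latency samples. A real HDR Histogram approximates these percentiles with bounded relative error instead of sorting every sample; all function names here are illustrative.

```python
import math

# Figures from the setup description; the even split across feeds/contracts is an assumption.
TOTAL_RATE_MSG_PER_S = 50_000
FEEDS = 25
CONTRACTS_PER_FEED = 10
WINDOW_S = 5


def messages_per_window(rate_msg_per_s: int, window_s: int) -> int:
    """Messages covered by one percentile reporting window."""
    return rate_msg_per_s * window_s


def percentile(sorted_values: list[float], p: float) -> float:
    """Exact nearest-rank percentile of a sorted, non-empty list (0 < p <= 100)."""
    rank = math.ceil(p * len(sorted_values) / 100)
    return sorted_values[max(rank, 1) - 1]


def window_report(latencies_us: list[float], ps: tuple[float, ...] = (50, 99)) -> dict[float, float]:
    """Percentiles for one window; an HDR Histogram would approximate these values."""
    values = sorted(latencies_us)
    return {p: percentile(values, p) for p in ps}


if __name__ == "__main__":
    print(messages_per_window(TOTAL_RATE_MSG_PER_S, WINDOW_S))    # 250000
    print(TOTAL_RATE_MSG_PER_S / FEEDS)                            # 2000.0 per feed (even split)
    print(TOTAL_RATE_MSG_PER_S / (FEEDS * CONTRACTS_PER_FEED))     # 200.0 per contract (even split)
```

In practice, LatencyStatAlgorithm feeds every latency into the histogram as it arrives, so no per-window list is kept; the sorted list here only makes the percentile definition explicit.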

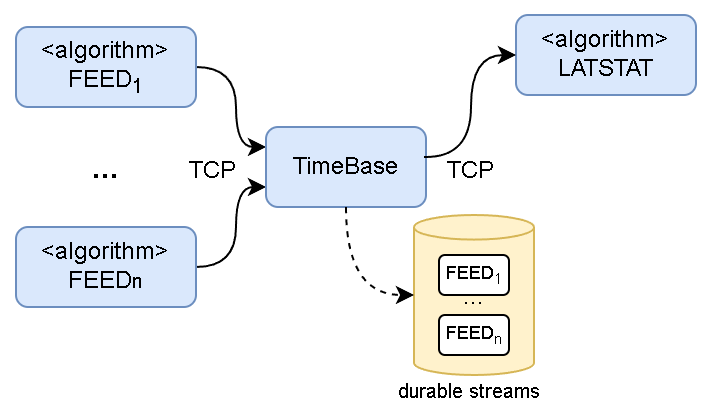

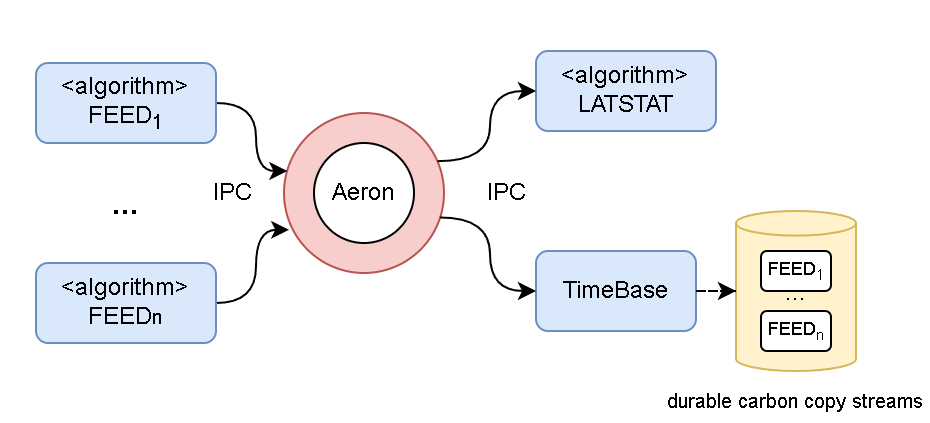

- Described components can be configured to either use TimeBase Streams (pictured above) or TimeBase Topics (shown below):

- The Aeron Media Driver ran standalone and was configured in SHARED threading mode.

- All topics were configured to run in IPC mode with the default buffer size of 16 MB. IPC mode assumes that producer and consumer are co-located on the same host.

- In all experiments, TimeBase streams used the 5.0 storage format and ran automatic purge processes that retained only the last 15 minutes of data.

- In Topics mode, TimeBase was also configured to write a carbon copy of each topic into a designated durable stream.

- TimeBase 5.6.59 and Ember 1.14.71 were used in this test.

- The whole setup ran under Docker Compose.
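The 15-minute purge window bounds how much data the durable streams keep. A minimal sketch, assuming the 50,000 messages/second rate stays steady and counting all streams together; `purge_cutoff` and `retained_messages` are hypothetical helpers, not TimeBase's purge API:

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(minutes=15)  # purge policy used for all streams in the test
RATE_MSG_PER_S = 50_000            # combined rate across all streams (assumed steady)


def purge_cutoff(now: datetime, retention: timedelta = RETENTION) -> datetime:
    """Data timestamped before this instant falls outside the retention window."""
    return now - retention


def retained_messages(rate_msg_per_s: int, retention: timedelta = RETENTION) -> int:
    """Approximate number of messages kept once purging reaches steady state."""
    return int(rate_msg_per_s * retention.total_seconds())


if __name__ == "__main__":
    now = datetime(2024, 1, 15, 12, 0, tzinfo=timezone.utc)
    print(purge_cutoff(now).isoformat())        # 2024-01-15T11:45:00+00:00
    print(retained_messages(RATE_MSG_PER_S))    # 45000000
```

Under these assumptions the streams hold on the order of 45 million messages at any time, regardless of how long the experiment runs.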

Results

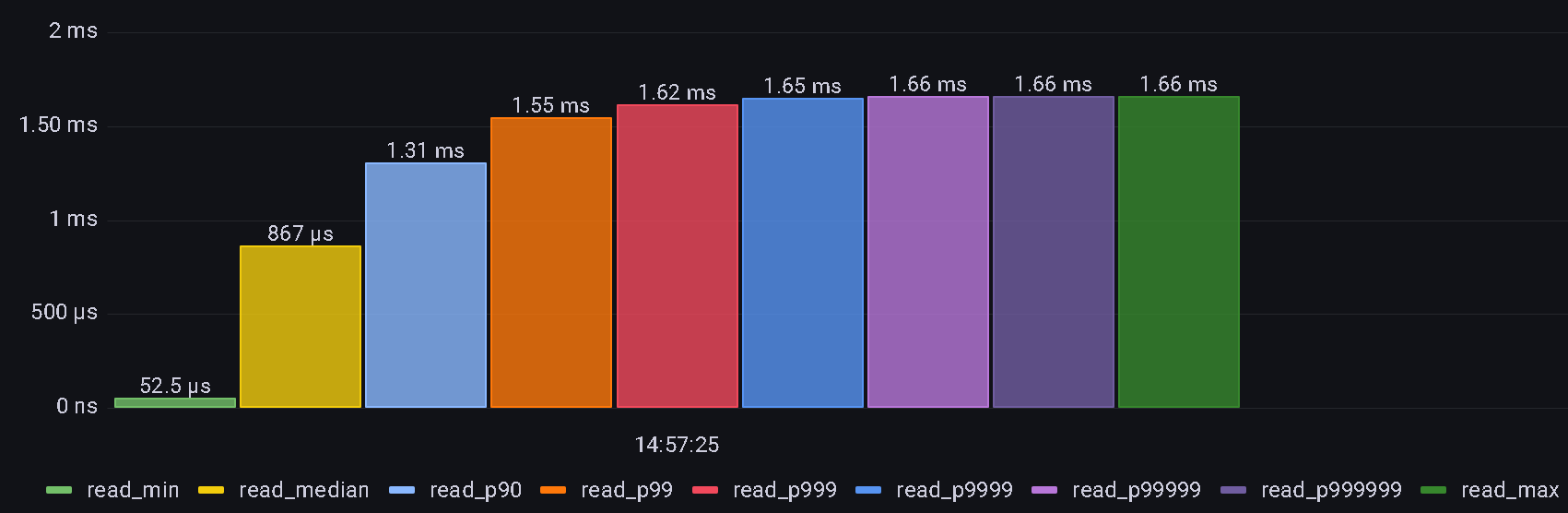

The following histogram shows typical observed latencies over random 5 seconds interval:

Streams:

Here we can see that median latency was 687 microseconds, while 99% of messages came under 1.550 milliseconds.

Here we can see that median latency was 687 microseconds, while 99% of messages came under 1.550 milliseconds.

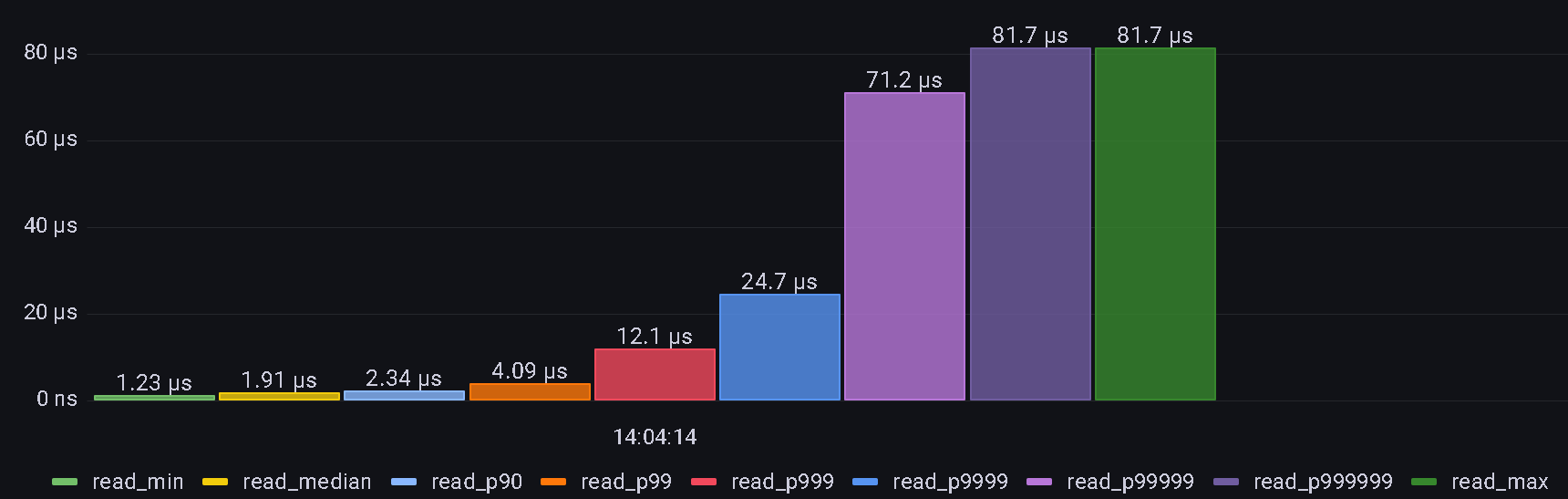

Topics:

Here we can see that median latency was 1.23 microseconds, while 99% of messages came under 4.09 microseconds.

Here we can see that median latency was 1.23 microseconds, while 99% of messages came under 4.09 microseconds.

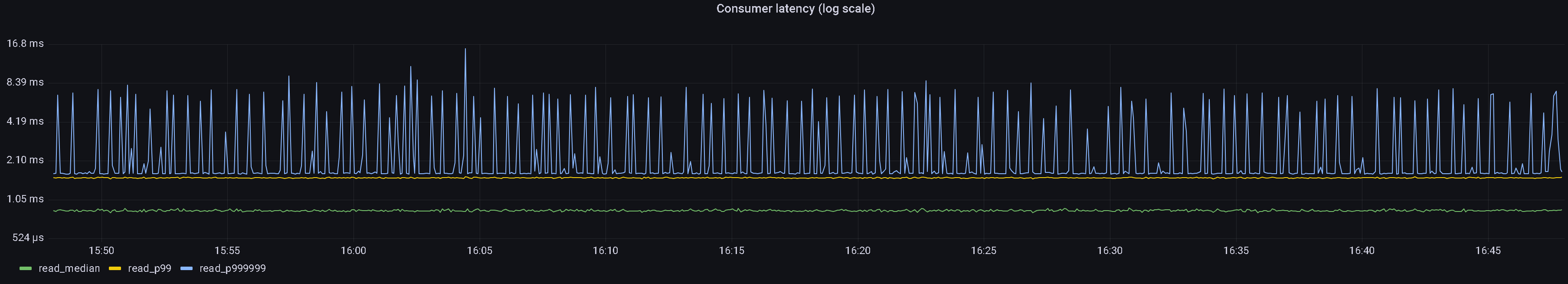

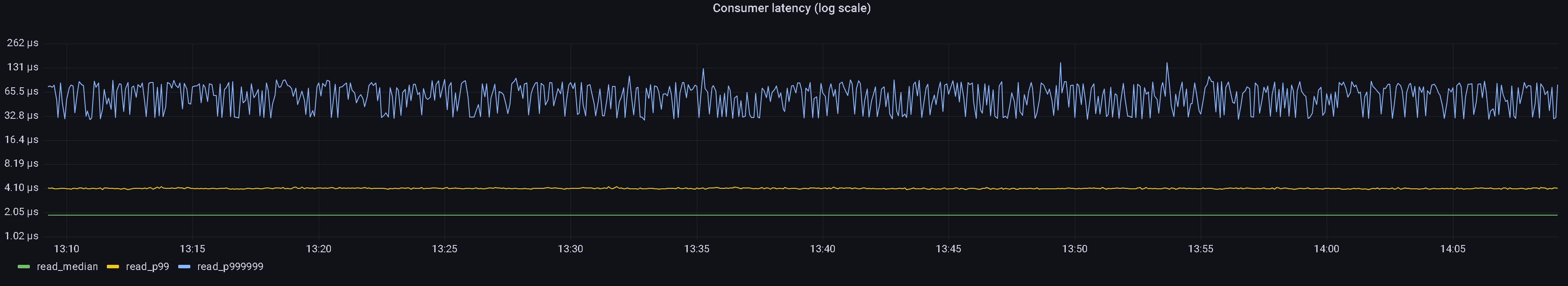

The next charts show P50, P99, and P999999 latency over 1-hour time window.

Streams:

Topics:
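Tail percentiles over a long window are easier to interpret as message counts. The sketch below assumes that P999999 denotes the 99.9999th percentile and that the 50,000 messages/second rate holds for the whole hour (180 million messages); `messages_above` is a hypothetical helper that estimates how many messages are slower than a given percentile:

```python
RATE_MSG_PER_S = 50_000  # assumed steady for the whole window
HOUR_S = 3_600


def messages_above(percentile: float, total_messages: int) -> int:
    """Expected number of messages slower than the given percentile."""
    return round(total_messages * (100 - percentile) / 100)


if __name__ == "__main__":
    total = RATE_MSG_PER_S * HOUR_S  # 180,000,000 messages per hour
    for label, p in (("P50", 50), ("P99", 99), ("P999999", 99.9999)):
        print(label, messages_above(p, total))
```

Under these assumptions, the hourly P999999 is set by roughly the slowest 180 messages, whereas within a single 5-second window (250,000 messages) the same percentile is effectively the maximum latency.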

Summary

This article illustrates the advantages of TimeBase IPC topics for producer-to-consumer latency:

| TimeBase media | Mean (µs) | P99 (µs) |
|---|---|---|
| Topics | 1.91 | 4.09 |
| Durable Streams | 867 | 1550 |
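The gap between the two media can be expressed as a ratio of the table values. A minimal sketch that computes it directly from the numbers above; `latency_ratio` is an illustrative helper:

```python
# Values copied from the summary table, in microseconds.
RESULTS_US = {
    "Topics": {"mean": 1.91, "p99": 4.09},
    "Durable Streams": {"mean": 867.0, "p99": 1550.0},
}


def latency_ratio(metric: str) -> float:
    """How many times higher the stream latency is than the topic latency."""
    return RESULTS_US["Durable Streams"][metric] / RESULTS_US["Topics"][metric]


if __name__ == "__main__":
    for metric in ("mean", "p99"):
        print(f"{metric}: streams/topics = {latency_ratio(metric):.0f}x")
```

With these figures, topics show roughly 450 times lower mean latency and roughly 380 times lower P99 latency than durable streams in this setup.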

Disclaimers

- TimeBase topics require additional CPU and memory resources.

- TimeBase topics have some operational overhead.

- Please consult your Deltix support team before using Topics in your setup.

Appendix A: Additional resources required to use TimeBase topics

CPU

One additional CPU core, pinned to the aeron-driver-conductor thread of the Aeron Media Driver container.

Memory

The Aeron home directory should be mapped to tmpfs (the RAM-backed filesystem that also backs /dev/shm). The following Ansible task does this:

```yaml
- name: Move Aeron directory to tmpfs
  mount:
    path: "{{ deltix_compose_dir }}/aeron"
    src: tmpfs
    fstype: tmpfs
    state: mounted
    opts: "size={{ 4 * 16 * number_of_topics }}M" # 4 term buffers of 16 MB (default size) per topic: Aeron uses 3, 1 is reserve
  become: true
```

For each topic, the Aeron Media Driver container needs 3 × 16 MB of RAM for the publication's term buffers, plus an extra 128 MB in total for the driver's Java process. This assumes that the default Aeron term buffer size of 16 MB is not overridden at the topic level. The task above multiplies by 4 rather than 3: strictly speaking, an Aeron publication uses only 3 term buffers, so the fourth is kept in reserve.

For example, for 10 topics we need 4 × 16 × 10 + 128 = 768 MB.

Note that the same amount of memory should be added to all containers that use topics (Aggregator, TimeBase, Ember).
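The sizing rule above can be written as a small helper. A sketch under the stated assumptions (default 16 MB term buffers, 4 buffers reserved per topic, 128 MB for the driver's Java process); the function names are hypothetical and mirror the Ansible expression `4 * 16 * number_of_topics`:

```python
TERM_BUFFER_MB = 16    # default Aeron term buffer size, assumed not overridden per topic
BUFFERS_PER_TOPIC = 4  # Aeron publications use 3 term buffers; the 4th is reserve
DRIVER_JVM_MB = 128    # one-off extra for the media driver's Java process


def tmpfs_size_mb(number_of_topics: int) -> int:
    """Size passed to the tmpfs mount, matching 4 * 16 * number_of_topics."""
    return BUFFERS_PER_TOPIC * TERM_BUFFER_MB * number_of_topics


def driver_memory_mb(number_of_topics: int) -> int:
    """RAM for the Aeron Media Driver container: term buffers plus the driver JVM."""
    return tmpfs_size_mb(number_of_topics) + DRIVER_JVM_MB


if __name__ == "__main__":
    print(tmpfs_size_mb(10), driver_memory_mb(10))  # 640 768
```

Keeping the reserve factor as a named constant makes it easy to drop to the strict minimum of 3 buffers per topic if memory is tight.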

Acknowledgements

TimeBase Topics rely on the Aeron library from Real Logic.