Ember Configuration Reference

Overview

Ember can be used in diverse solutions: as a matching engine, as a FIX Gateway, as a platform to host SELL-side and BUY-side trading algorithms and trading connectors, etc.

The modular design and configuration flexibility make these diverse solutions possible.

Format

Ember configuration uses text files in the HOCON (Human-Optimized Config Object Notation) format described here.

HOCON is more flexible than the JSON (JavaScript Object Notation) format in that there are several ways to write valid HOCON. Below are two examples of valid HOCON.

Example #1:

settings: {

topic: "NIAGARA",

maxSnapshotDepth: 30 # This is a comment

}

Example #2:

settings {

topic = NIAGARA

maxSnapshotDepth = 30 // This is also a comment

}

If a HOCON configuration does not appear to be working, check the following.

- Curly brackets must be balanced. (Keep indentation and formatting neat. This helps spot errors.)

- Quotation marks must be balanced.

- Duplicate keys that appear later take precedence (override earlier values).
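
The duplicate-key rule is easy to overlook, so here is a minimal sketch of it. The keys are taken from the examples above; the second value (64) is purely illustrative.

```hocon
settings {
  topic = NIAGARA
  maxSnapshotDepth = 30
}

# A later assignment to the same path overrides the earlier one.
# After parsing, settings.maxSnapshotDepth is 64 and settings.topic is still NIAGARA.
settings.maxSnapshotDepth = 64
```

If a value does not seem to take effect, searching the file (and any included files) for a later assignment to the same path is a reasonable first check.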

General Pattern

Most pluggable services in Ember follow the declaration pattern shown below:

MYSERVICE {

factory: <fully-qualified-name-of-Java-implementation>

settings {

parameter1: value1 // custom

parameter2: value2 // parameters

}

}

This Spring Beans-like approach allows extending the system with custom-built services. See the Custom Service settings section for more information.

Configuration files

Most components have the default configuration described in the “template” configuration file ember-default.conf. The main configuration file ember.conf overrides and refines the default configuration.
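
As a sketch of such an override: an ember.conf normally contains only the values that differ from ember-default.conf. The two settings below are borrowed from the TimeBase and Cache sections of this reference; the host name and the value 5000 are illustrative, not recommendations.

```hocon
# ember.conf: only the values that differ from ember-default.conf

# Point Ember at a specific TimeBase instance
timebase.settings.url = "dxtick://timebasehost1:8011"

# Raise the per-source inactive order cache above the documented default of 4096
engine.cache.maxInactiveOrdersCacheSize = 5000
```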

On startup, Ember searches the following locations for configuration files:

- Java System properties (for example, -Dember.home=/home/deltix/emberhome).

- The file ember.conf in Ember home directory.

- The resource ember.conf in CLASSPATH.

- Resource ember-default.conf.

The last resource (ember-default.conf) is shipped with installation and defines default values for all server configuration parameters.

Configuration secrets

Currently, there are several methods of storing sensitive information in configuration files (passwords, API keys, etc.).

- Secret as a Service: Ember is integrated with:

- Hashicorp Vault - This separately installed service is free to use for smaller deployments. See this article for more information.

- AWS Secrets Manager. See this article for more information.

- Azure Key Vault. See this article for more information.

- Hashing: Passwords can be hashed using a special utility (bin/mangle). Ember automatically detects an encrypted secret and decrypts it at runtime. See this article for more information.

- Environment variables: Sometimes secrets can be securely injected into environment variables. You can reference environment variables in HOCON using the ${ENV_VARIABLE_NAME} notation.
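
A minimal sketch of the environment-variable approach follows. TIMEBASE_PASSWORD is a hypothetical variable name chosen for illustration; the remaining keys come from the TimeBase section of this reference.

```hocon
timebase.settings {
  url = "dxtick://timebasehost1:8011"
  username = ember
  # TIMEBASE_PASSWORD is a hypothetical environment variable name.
  # HOCON falls back to the process environment when the path is not defined in the config.
  password = ${TIMEBASE_PASSWORD}
}
```

Standard HOCON also has an optional form, ${?TIMEBASE_PASSWORD}, which leaves the key unset instead of failing when the variable is missing.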

Configuration Reference

TimeBase

Almost every deployment of Ember requires the Deltix TimeBase service:

timebase.settings {

url = "dxtick://timebasehost1:8011"

}

If your TimeBase has User Access Control enabled, you need to define the user credentials as well:

timebase.settings {

url = "dxtick://timebasehost1:8011"

username = ember

password = EVcec95166e01fcf5792b8fc215a5dfb62 # NB: encrypted

}

If TimeBase is configured to use TLS (SSL), use the dstick:// scheme in the URL:

timebase.settings {

url = "dstick://timebasehost1:8011"

}

When using a self-signed certificate on the TimeBase server side, be sure to configure Ember (as a TimeBase client) per the instructions here.

OAUTH2 TimeBase connection

When TimeBase is using OAUTH2 authentication, use the following configuration stanza to define connection parameters:

timebase {

factory = "deltix.ember.app.Oauth2TimeBaseFactory"

settings = null

settings {

url = "dxtick://localhost:8011"

oauth2url = "http://localhost:8282/realms/timebase/protocol/openid-connect/token"

clientId = "client id goes here"

clientSecret = "client secret" # use hashed value or vault

scope = "api://quantoffice/shell openid profile" # optional

}

}

For more information about configuring OAUTH2 authentication, read the documentation for TimeBase and TimeBase Admin.

OMS settings

Validation Logic

The configuration stanza engine.validation configures various parameters that affect the validation of inbound trading requests:

- allowCancelOfFinalOrders – When set to false, OMS rejects attempts to cancel orders that are in a completed state. By default (true), Ember forwards such requests to a destination venue even if the order seems to be complete (according to the state maintained by Ember OMS).

- allowFastCancel - Allows cancelling of unacknowledged orders (some destination venues do not allow this).

- allowFastReplace - Allows replacing of unacknowledged orders or orders that have pending replacement requests (pessimistic/optimistic approach to cancel replace chains).

- maxPendingReplaceCount – When fast replace is allowed, this parameter controls how many order replacement requests may be pending (unacknowledged by order destination venue).

- pricesMustBePositive – When set, OMS rejects orders that have a negative limit price (negative prices may be normal in some markets, e.g., exchange-traded synthetics). Default value is true.

- maxOrderRequestTimeDifference – This parameter helps to detect order requests that spent too much time in transit to Ember OMS. Make sure the order source periodically synchronizes the local clock with some reliable source. This setting was removed in Ember 1.10.38+. You can now control this behavior via a similar setting in the FIX Order Entry gateway.

- allowReplaceTrader – Allows modification of order’s trader (e.g., to follow the CME tag 50 requirement). When the modification of an order’s trader is allowed, the Trader projection cannot be used in risk rules.

- allowReplaceUserData - Allows modification of an order’s user data (e.g., when users put some free text notes into this field). When the modification of an order’s user data is allowed, the UserData projection cannot be used in risk rules.

- nullDestinations – An array of destinations that are no longer needed but might have accumulated orders and positions (this setting simply suppresses ember startup warnings).

The following example shows default values for each parameter:

engine {

validation {

settings {

allowCancelOfFinalOrders = true

allowFastCancel = true

allowFastReplace = false

pricesMustBePositive = true

maxPendingReplaceCount = 10

}

}

nullDestinations = [ "CME", "ILINK2" ]

}
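
To change only a few of these defaults, it should be enough to override the individual keys. The sketch below reuses the paths from the example above; the values are illustrative and should be checked against what your destination venues allow.

```hocon
engine.validation.settings {
  allowFastReplace = true        # permit replacing unacknowledged orders
  maxPendingReplaceCount = 3     # illustrative: at most 3 unacknowledged replace requests
  pricesMustBePositive = false   # accept negative limit prices (e.g., synthetics)
}
```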

Order Router

Order Router is an OMS component that controls where trading requests flow. Normally, requests are routed according to their destination. The custom order router can handle requests with an undefined destination or even override the intended destination in certain cases.

Here is an example of a simple order router:

engine.router {

factory = "deltix.ember.service.engine.router.SimpleOrderRouterFactory"

settings {

defaultDestination = SIMULATOR

# 'true' routes ALL trading requests to the destination defined as defaultDestination

force: false

# if destination is not provided, tries to use request’s exchange as destination

fallbackToExchange: true

}

}
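
For comparison, here is a sketch of the same router with force enabled, which, per the comment above, sends every trading request to defaultDestination regardless of the destination on the request. Using this for a test environment is an assumption about a typical use, not a documented recommendation.

```hocon
engine.router {
  factory = "deltix.ember.service.engine.router.SimpleOrderRouterFactory"
  settings {
    defaultDestination = SIMULATOR
    # 'true' routes ALL trading requests to SIMULATOR
    force: true
  }
}
```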

This is another example of a custom order router that re-routes trading requests that are designated to KRAKEN to one of the trading connectors, depending on the trader’s group:

engine {

router {

factory = "deltix.ember.service.engine.router.custom.TraderGroupOrderRouterFactory"

settings {

interceptedDestination = "KRAKEN"

traderGroupToDestination : [

"NewYorkGroup : KRAKEN-US",

"LondonGroup : KRAKEN-GB",

"SingaporeGroup : KRAKEN-SG"

]

}

}

}

Changing the router on a production system may result in a change in system behavior:

All historical trading requests that relied on the previous routing destinations are routed according to the new router logic after restart.

Cache

The configuration stanza engine.cache allows tuning internal OMS cache parameters:

engine {

cache {

orderCacheCapacity = 16K

maxInactiveOrdersCacheSize = 4K

initialActiveOrdersCacheSize = 4K

initialClientsCapacity = 16

hashFunction = DEFAULT

mapType = CHAINING

}

}

Where:

- initialClientsCapacity – How many sources of orders to expect (not a limit, just a hint).

- initialActiveOrdersCacheSize – How many active orders to expect (not a limit, just a hint).

- orderCacheCapacity – Defines the total expected order count that ember keeps in memory at any moment in time (not a limit). This is a hint to the order pool to pre-allocate a given number of blank order instances.

- maxInactiveOrdersCacheSize – Defines how many inactive (REJECTED/CANCELLED/COMPLETELY_FILLED) orders ember keeps in memory per order source.

- hashFunction - Defines hash function used, one of DEFAULT | NATIVE | XXHASH | METRO. Default is DEFAULT. Since Ember 1.15.

- mapType - Defines underlying data structure used for cache, one of CHAINING | LINEAR_PROBING | ROBIN_HOOD (default is CHAINING). Since Ember 1.15

hashFunction = DEFAULT indicates that Java Default hash function will be used for the cache.

hashFunction = NATIVE indicates that native hash from this blog will be used for the cache.

hashFunction = XXHASH indicates that xxHash from implementation here will be used for the cache.

hashFunction = METRO indicates that metro hash from implementation here will be used for the cache.

Ember OMS reloads the journal on startup, but once started it relies solely on an in-memory cache of orders. This cache contains all active and the last N inactive orders for each order source. The idea is to be able to process rare cases of "fill after cancel". For some exchanges, Fill events may take a slightly longer path than other order events like Cancel (and hence may arrive out of the normal order lifecycle sequence). This out-of-sequence fill usually happens within a second or two of order completion.

We suggest the following math: let's say each order source (each algorithm, or API client) sends us up to 1000 orders per second. We want to be able to process late fills reported within 5 seconds of order completion. In this case, the inactive (completed) order cache size should be set to 1000 × 5 = 5000. The default value is 4096.

# Maximum amount of inactive (complete) orders to keep in cache (per source)

engine.cache.maxInactiveOrdersCacheSize = 5000

We strongly advise not to set this to larger numbers - increased cache size leads to excessive memory consumption and slows down OMS order processing.

Engine Order Transformer

You can define a custom transformer of in-bound order requests.

Here is an example:

engine {

transformer: {

factory = …

settings {

…

}

}

}

Use this option with care. Ember stores the result of this transformation into Ember Journal. There is no durable trace of the original order request.

Transformation of inbound events or other message types is not yet supported.

Custom Instrument Metadata

In some rare cases, the engine needs access to additional information about each instrument. For example, a custom risk rule may want to keep track of some extra information like the instrument industry sector or per-exchange minNotional. This can be achieved using a custom Instrument Factory:

engine {

instrumentInfoFactory {

factory = "deltix.ember.service.engine.CustomExtendedInstrumentInfoFactory"

settings {}

}

}

Error Handling

The engine’s configuration stanza exceptions {} defines the system reaction to abnormal situations.

TimeBase Disconnect

Ember components (algorithms and connectors) can recover from the loss of a TimeBase connection; however, there may be a gap in market data, message loss in output channels, and other side effects. You can define how Ember should react to the loss of a TimeBase connection:

engine.exceptions.timebaseDisconnect = HaltTrading

Possible values are:

- Continue - Logs the error, lets Ember recover.

- HaltTrading - Halts trading (forces the operator to confirm that the system fully recovered on TimeBase reconnect).

- Shutdown - Graceful Ember shutdown.

- ShutdownLeaderWithFollower - Shutdown when leader-with-follower; continue when follower, or leader without follower.

Prior to Ember 1.10.14:

exceptions.haltOnTimebaseLoss (true) - Halts trading every time Ember loses its TimeBase connection.

Misc parameters

This section describes the remaining parameters. If you want to change these, you can place them inside the engine {} configuration stanza:

maxNumberOfRequestErrorsToLog (64) - Threshold on the number of exceptions Ember prints into the output log (subsequent errors are logged as request reject reasons on messages). The engine has a performance counter for it.

maxInactiveUnknownWarningsToLog (16) - Maximum number of warnings about events for no-longer active (or unknown) orders. These events cannot be processed. The engine has a performance counter for it.

maxRiskVetosToLog (16) - Maximum number of risk rule vetos (order rejects) to log. The engine has a performance counter for it.

convertStatusEventsToNormalEvents (true) - Do not send Order Status events to algorithms; instead, convert them to normal events that report incremental differences.

useMaxRemainingQuantity (false) - Instruct OMS to enrich messages using maxRemainingQuantity rather than the remaining quantity of the working order. Slower.

allowUnrestrictedPositionRequests (false) - By default system is configured in "single API client can only see own positions" mode. Clients are identified by API keys that are mapped to order Source IDs hence by default Source ID is a required part of projection when requesting positions. When this security-driven restriction is removed, different API Clients can see the positions of each other (as well as system-wide positions).
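
Put together, the parameters above would look roughly like this inside the engine stanza. The values shown are the defaults quoted in parentheses above, so the block as written changes nothing; it is only meant as a starting point for edits.

```hocon
engine {
  maxNumberOfRequestErrorsToLog = 64
  maxInactiveUnknownWarningsToLog = 16
  maxRiskVetosToLog = 16
  convertStatusEventsToNormalEvents = true
  useMaxRemainingQuantity = false
  allowUnrestrictedPositionRequests = false
}
```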

Security Metadata (Instruments)

By default, Ember uses the local TimeBase stream “securities” to learn about Security Metadata (AKA security master). This stream is supported by most QuantServer/QuantOffice/CryptoCortex ecosystems.

On startup, Ember reads a copy of the security metadata from the “securities” stream. During run time, Ember watches this stream for updates (only for inserts/updates; deletes are ignored). You can override the stream name as follows:

instruments {

subscription {

stream = "securities"

filter = null # SELECT * FROM $stream WHERE $filter

}

useCentralSMD = true

}

Instrument filter

You can also define a filter that is used to skip some instruments. For example, the following line excludes instruments that do not have a TradeableSymbol column defined:

instruments.subscription.filter = "TradeableSymbol != NULL"

Instrument attributes filter

By default, Ember loads all available non-empty instrument attributes. The non-standard attributes are exposed as key-value style custom attributes in case they are required for some custom logic. If the security metadata stream contains a lot of unneeded custom attributes, you can optimize memory usage by limiting the number of custom attributes loaded for each instrument. For example, you can do this by explicitly listing only the custom attributes that should be loaded:

instruments.subscription.includeCustomAttributes = [ "minNotionalApplyToMarket" ]

minNotionalApplyToMarket is the only custom attribute used by Ember at this point. So unless your custom logic uses other custom attributes, you can safely apply this setting.

Instead of whitelisting custom attributes you could also blacklist them as follows:

instruments.subscription.excludeCustomAttributes = [ "someUnneededAttribute" ]
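
The instrument filter and the attribute list can also be written together in block form. This is only a sketch: it assumes the attribute list lives under the same instruments.subscription stanza as stream and filter, as the one-line settings in this section suggest.

```hocon
instruments {
  subscription {
    stream = "securities"
    # Skip instruments without a TradeableSymbol
    filter = "TradeableSymbol != NULL"
    # Load only the custom attribute that Ember itself uses
    includeCustomAttributes = [ "minNotionalApplyToMarket" ]
  }
}
```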

Central Security Master

Ember can also provide an API wrapper to access the Deltix Central Security MetaData service. This centrally maintained service contains detailed information about FX, Crypto, and some FUTURE markets. This information can be used by algorithms like the Smart Order Router. If your environment has no access to the internet, or is not covered by this service, you can disable this feature in the following way:

instruments.useCentralSMD = false

Security Metadata Watch service

By default, Ember loads all instruments from the "securities" stream on startup and keeps a live TimeBase cursor that listens for any changes (mostly additions of new instruments).

However, when Ember is used in tandem with Universe Configurator, you may want to switch to the canonical approach: whoever wants to change the "securities" stream must do it under a write lock. Ember is notified every time the write lock on "securities" is released and performs a full reload of instruments under a read lock. To enable this mode, add the following configuration stanza to your ember.conf:

instrumentsWorker {

factory = "deltix.ember.service.smd.CanonicalInstrumentServiceWorkerFactory"

settings {

}

}

Advantages of canonical approach - this is how other TimeBase clients watch securities changes.

Disadvantages of canonical approach - it is less efficient, it results in full reload of securities stream on any change.

Pricing Service

While trading algorithms receive market data prices directly from TimeBase, other components like position and risk use a pricing service. The pricing service provides access to recent BBO prices. This is a performance trade off - a direct subscription for market data requires extra CPU and memory resources.

Here is an example of a pricing service configuration for a simple case when market data comes from three TimeBase streams:

pricing {

settings {

liveSubscription.streams = [COINBASE, BINANCE, BITFINEX]

}

}

Alternatively, if market data is distributed via high-performance TimeBase topics, configuration looks like this:

pricing {

settings {

liveSubscription.topics = [COINBASE, BINANCE, BITFINEX]

}

}

Topics and Streams cannot be mixed.

If market data streams or topics have a lot of irrelevant instruments, you can limit your subscription by specifying what symbols and/or types of messages you want to subscribe for.

pricing {

settings {

liveSubscription {

streams = [COINBASE, BINANCE, BITFINEX]

symbols = [BTCUSD, LTCUSD, ETHUSD]

types = [deltix.timebase.api.messages.universal.PackageHeader]

}

}

}

Settlement Prices

The pricing service also provides previous day settlement prices for interested consumers.

To enable this data, add the settlementPriceSubscription configuration stanza as shown in this example:

pricing {

settings {

…

settlementPriceSubscription {

streams: [ "settlementPrices" ]

symbols: [ "TSLA", "AMZN", …] // Optional, all symbols if omitted

types: [ "deltix.timebase.api.messages.TradeMessage" ] // Optional, all message types if omitted

}

}

}

Ember expects the settlement prices stream to have the last known message broadcast enabled (so-called TimeBase unique streams).

Ember can extract settlement prices from traditional TradeMessages that have been around since QuantServer 1.0 or from the Universal Market Data format introduced in version 5.X. (In the latter case, StatisticsEntry[type = SETTLEMENT_PRICE] is used).

Historical Prices

The pricing service receives and caches live market data. However, for traditional markets that have periodic trading sessions, or for rarely traded instruments, there may be a need to warm-up a cache of BBO prices using historical data. Warm up is performed during server startup. The following configuration snippet shows how to warm up the pricing service with 1 hour of historical data:

pricing {

settings {

liveSubscription {

streams = [COINBASE, BINANCE, BITFINEX]

}

historicalDepth = 1h # specifies duration

}

}

A historical subscription can be fine-tuned with its own subscription stanza:

pricing {

settings {

liveSubscription {

streams = [COINBASE, BINANCE, BITFINEX]

symbols = [BTCUSD, LTCUSD, ETHUSD]

}

historicalSubscription {

streams = [COINBASE, BINANCE, BITFINEX]

symbols = [BTCUSD]

}

historicalDepth = 3d # specifies duration

}

}

Mockup Pricing Service

In some rare cases, you may need to run Ember without real market data.

There is a mockup version of a pricing service that can be configured as follows:

pricing {

factory = "deltix.ember.app.price.FakePricingServiceFactory"

settings {

fixedPrices: {

BTCUSD: 3909.70

ETHUSD: 133

LTCUSD: 31.899

LTCBTC: 0.008148

BCHUSD: 170.27

BCHBTC: 0.046

ETHBTC: 0.034045

XRPUSD: 0.39287

}

}

}

Main currency

The pricing service also helps some OMS and risk components with currency conversion. Risk limits, like the Net Open Position limit, convert positions in each individual currency to the main currency. The default main currency is USD.

pricing {

…

settings {

…

mainCurrency: USD

}

}

Advanced Pricing Service Settings

pricing {

settings {

exchanges = 32 # how many exchanges L1 NBBO aggregator can expect at most

useFullOrderBookProcessor = false # false = process only snapshots but save system resources; true = process every message, NBBO L2

reconnectDelay { # TimeBase reconnect settings: exponential delay with base 2

min = 5s

max = 60s

}

}

}

Algorithms

This section describes the configuration options for deploying Ember algorithms. See the Algorithm Developer’s Guide for more information about algorithm capabilities.

Algorithms are the building blocks of the custom business logic inside Ember. The following example shows the configuration of the BOLLINGER algorithm. As you can see below, each algorithm must be defined under the algorithms { } stanza.

algorithms {

BOLLINGER: ${template.algorithm.default} {

factory = deltix.ember.service.algorithm.samples.bollinger.BollingerBandAlgorithmFactory

settings {

bandSettings {

numStdDevs: 1.5

commissionPerShare: 0.001

openOrderSizeCoefficient: 1

profitPercent: 20

stopLossPercent: 40

numPeriods: 100

enableShort: true

enableLong: true

}

}

subscription { # input channels

streams = ["sine"]

symbols = ["BTCUSD", "LTCUSD"]

types = ["deltix.timebase.api.messages.TradeMessage"]

typeLoader: {

factory: deltix.ember.algorithm.pack.sor.balance.SORMessageTypeLoaderFactory

settings { }

}

}

}

}

Each algorithm configuration stanza has the following parts:

- Algorithm identifier: BOLLINGER or NIAGARA in this case. This identifier must be a valid ALPHANUMERIC(10) text value. Basically, the identifier may use capital English letters, digits, and punctuation characters, and must not exceed 10 characters (See Deltix Trading Model for more information about the ALPHANUMERIC(10) data type).

- Algorithm Factory Class: This parameter tells Ember where an algorithm’s code can be found during startup.

- Settings: This configuration block defines the custom deployment parameters of each algorithm. Each setting in this block corresponds to a property of algorithm factory class.

- Subscription: If an algorithm uses a standard way of receiving market data, this section references the source of it. Typically, this is a set of TimeBase Streams or Topics that broadcast market data inside Deltix infrastructure. Optionally, a subscription can be limited to a specific set of instruments (defined by the symbols parameter).

Let's review one more example of an algorithm definition:

NIAGARA: ${template.algorithm.NIAGARA} {

settings {

symbols: ${contractsString}

bookSnapshotInterval: 15s

maxSnapshotDepth: 64

feedTopicKey = "l2feed" #output channel

validateFeed = true

}

}

Algorithm Market Data Subscription

In the above sample, the subscription stanza defines what market data is available to an algorithm.

Note the difference between stream-based and topic-based market data configuration: streams are defined inside the subscription { } stanza and can include symbol and message type filters. An algorithm cannot define symbol or message type filters for topics; you have to consume the entire topic feed.

An algorithm can subscribe for market data from TimeBase streams:

BOLLINGER: ${template.algorithm.default} {

subscription {

streams = ["stream1", "stream2"]

symbols = ["BTCUSD", "LTCUSD"]

types = ["deltix.timebase.api.messages.TradeMessage"]

channelPerformance = MIN_CPU_USAGE

allInstruments = false

}

}

A subscription stanza may have the following optional parameters:

- symbols - Defines a symbol filter (a list of instrument symbols that the algorithm receives, usually a subset of what is available in the market data streams). If this parameter is missing or set to null, the algorithm subscribes to all symbols in the provided TimeBase streams. A special case is an empty symbols array: this configuration deploys the algorithm with an empty initial subscription (the algorithm may still add symbols during runtime). A missing subscription stanza deploys an algorithm without market data.

- types - Defines a type filter - a list of message types that the algorithm receives. When this setting is skipped, the algorithm gets messages of all types. Types are identified by the TimeBase type name, which usually matches the name of the corresponding Java message class, for example "deltix.timebase.api.messages.TradeMessage".

- typeLoader - Defines a custom TimeBase type loader. Type loaders allow mapping TimeBase message types to Java message classes available in the Ember CLASSPATH. The type loader is defined by the factory class (as usual for config). See the Type Loader section in Algorithm Developer's Guide for more information.

- channelPerformance - Defines TimeBase market data cursor operation mode. Possible values are:

- MIN_CPU_USAGE - Prefer to minimize CPU usage. Don't do anything extra to minimize latency (default mode).

- LOW_LATENCY - Prefer to minimize latency at the expense of higher CPU usage (one CPU core per process).

- LATENCY_CRITICAL - Focus on minimizing latency even if this means heavy load on CPU. Danger! Read Timebase cursor API before using.

- HIGH_THROUGHPUT - Prefer to maximize messages throughput. For loopback connections IPC communication will be used.

- allInstruments - Normally an algorithm tracks instrument metadata (from the "securities" TimeBase stream) only for the instrument set that matches its market data subscription. When "allInstruments = true", the algorithm tracks securities metadata for all available instruments in the configured securities stream. When "allInstruments = false" or not specified, the algorithm only tracks securities metadata for instruments that it is subscribed to.
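
As an illustration of the last two parameters, the sketch below narrows market data to two symbols, asks for a lower-latency cursor, and still tracks metadata for every instrument. The stream and symbol names are illustrative.

```hocon
subscription {
  streams = ["NASDAQ"]
  symbols = ["AAPL", "MSFT"]          # market data for these two symbols only
  channelPerformance = LOW_LATENCY    # lower latency at the cost of higher CPU usage
  allInstruments = true               # metadata for all instruments in the securities stream
}
```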

Subscription Examples

In the following example, the algorithm subscribes to AAPL and MSFT instruments from the NASDAQ TimeBase market data stream:

subscription {

streams = ["NASDAQ"]

symbols = ["AAPL", "MSFT"]

}

In the following example, the algorithm subscribes to market data for all symbols (all instruments) from the NASDAQ stream:

subscription {

streams = ["NASDAQ"]

}

Identical configuration:

subscription {

streams = ["NASDAQ"]

symbols = null

}

In many cases, trading algorithms only need market data for instruments that this algorithm wants to trade. Avoid subscribing to entire stream of market data where possible, as this may be wasteful.

In the next example you can see initially empty market data subscription:

subscription {

streams = ["stream1", "stream2"]

symbols = [] # empty filter means "no market data initially"

}

An algorithm may control its market data subscription during runtime using a special API.

And finally, algorithms that do not need market data may skip the subscription section completely:

NOMARKET: ${template.algorithm.default} {

// subscription block is not present

}

Market Data from TimeBase Topics

As an option, an algorithm may receive market data from a TimeBase topic:

BOLLINGER: ${template.algorithm.default} {

topic = "topic1"

}

or multiple topics:

BOLLINGER: ${template.algorithm.default} {

topics = ["topic1", "topic2"]

}

There is no per-symbol filtering capability for topics.

Key differences:

- Topics are defined outside the subscription stanza

- Topics cannot define type/symbol filters. The entire content of the topic is fed into the algorithm.

Starting from Ember version 1.14.67, an algorithm can receive data from both topics and streams:

BOLLINGER: ${template.algorithm.default} {

topics = ["topic1", "topic2"]

subscription {

streams = ["stream1", "stream2"]

}

...

Furthermore, Deltix Universe Configurator has a special convention: when a market data connector output name starts with the % sign, it automatically creates a topic with the provided name (and also instructs TimeBase to keep a carbon-copy stream). For example, when the Coinbase exchange connector uses %COINBASE as its output name, the data is broadcast into the %COINBASE TimeBase topic and also recorded into the COINBASE TimeBase stream.

Ember supports this naming convention. In the following configuration, the algorithm receives market data from the %COINBASE topic.

BOLLINGER: ${template.algorithm.default} {

subscription {

streams = ["%COINBASE%"]

}

...

This was done to simplify conversion of existing setups from streams to topics for market data delivery. One can simply add % prefix to stream name in Universe Configurator and Ember configuration to switch existing setup to Topic. More about this subject can be found in Algorithm Developer's Guide.

Algorithm Configuration Template

The template defines low-level settings available for each algorithm. For example:

- CPU idle strategies

- Default order cache capacity

- Interval of reconnection to TimeBase market data in case of failures

template.algorithm.default {

queueCapacity = ${template.queueCapacity.default}

idleStrategy = ${template.idleStrategy.default}

# exponential delay with base 2

reconnectDelay {

min = 5s

max = 60s

}

settings {

orderCacheCapacity = 4096

maxInactiveOrdersCacheSize = 1024

initialActiveOrdersCacheSize = 1024

initialClientsCapacity = 16

}

}

To be accurate, we need to mention that in the earlier example, NIAGARA used a custom template. Algorithms like NIAGARA that Deltix provides out-of-the-box typically have a default set of settings defined in ember-default.conf.

Here is how this looks:

template.algorithm.NIAGARA: ${template.algorithm.default} {

factory = deltix.ember.quoteflow.niagara.NiagaraMatchingAlgorithmFactory

settings {

ackOrders: false

preventSelfTrading: true

approxNumberOfOrdersPerSymbol: 512

bookSnapshotInterval: 15s

maxSnapshotDepth: 100

feedTopicKey: null

feedStreamKey: null

marketCloseInterval: 0s # debug only

validateFeed: false # Enables Data Validator of market data feed published to stream/topic

}

}

Here is another example of deploying a PVOL algorithm using the template:

PVOL: ${template.algorithm.default} {

factory = "deltix.ember.algorithm.pack.pvol.PVOLAlgorithmFactory"

settings {

defaultOrderDestination = SOR

}

subscription {

streams = ["COINBASE", "BINANCE", "KRAKEN"]

symbols = [BTC/USD, LTC/USD, ETH/USD, LTC/BTC, BCH/USD, BCH/BTC, ETH/BTC]

}

}

Latency tracing

To enable latency tracing in your algorithm, add a latencyTracer stanza as shown below:

algorithms {

PVOL: ${template.algorithm.default} {

...

latencyTracer {

statInterval = 15s

maxExpectedLatency = 100ms

maxErrorsToLog = 100

}

}

}

Here we are showing how to do this for PVOL algorithm.

- statInterval - How often the algorithm collects latency metrics and publishes them into counters (which exposes them to downstream processing in Prometheus/Grafana). When this parameter is set to zero (default), latency tracing is disabled.

- maxExpectedLatency - Internally this component uses an HDR histogram to record each latency measurement, so it needs to know the latency range in order to store measurements in memory efficiently. This parameter defines the maximum expected latency value. Default is 100 milliseconds. When a specific latency recording exceeds this maximum, an error is logged and the value is overridden with the given maximum.

- maxErrorsToLog - throttles how many errors the Latency Tracer can log. Default is 100. Once this value of log messages is reached further logging is suppressed.

- ordersUseOriginalTimestamp - Normally tick-to-order requests use

OrderRequest.originalTimestampto correlate order submission time with tick time. That is the Tracer exects that fieldoriginalTimestampcontains market data (tick) as-received timestamp. However in some cases this timestamp is not available. This setting switches this latency meter to use main timestamp field of the request (OrderRequest.timestamp). Default is 'true'. Do not flip this setting to 'false' unless you understand the nature of your market data. - warmupCount - After system restart exclude first group of orders specified by this count from measurement. JVM Warm Up. Default is 1000.

In most cases, you do not need to change most of these settings. The simplest way to turn on latency tracing is:

algorithms {

PVOL: ${template.algorithm.default} {

...

latencyTracer {

statInterval = 15s

}

}

}

Or in shorter form:

algorithms.PVOL.latencyTracer.statInterval = 15s

Latency values are reported in nanoseconds.
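The interaction between warmupCount, maxExpectedLatency, and maxErrorsToLog described above can be sketched in a few lines of Python. This is only an illustration of the documented behavior, not Ember's implementation (which records into an HDR histogram). The class name, method names, and the plain list used in place of the histogram are all hypothetical.

```python
class LatencyRecorderSketch:
    """Hypothetical illustration of the latencyTracer settings; not Ember code."""

    def __init__(self, max_expected_latency_ns=100_000_000,
                 max_errors_to_log=100, warmup_count=1000):
        self.max_expected_latency_ns = max_expected_latency_ns
        self.max_errors_to_log = max_errors_to_log
        self.warmup_count = warmup_count
        self.seen = 0       # measurements observed since restart
        self.errors = []    # stand-in for the error log
        self.samples = []   # stand-in for the HDR histogram

    def record(self, latency_ns):
        self.seen += 1
        if self.seen <= self.warmup_count:
            return  # warm-up measurements are excluded
        if latency_ns > self.max_expected_latency_ns:
            # Log the overflow only until the error budget is used up.
            if len(self.errors) < self.max_errors_to_log:
                self.errors.append(f"latency {latency_ns} ns exceeds maximum")
            # The recorded value is replaced with the configured maximum.
            latency_ns = self.max_expected_latency_ns
        self.samples.append(latency_ns)


# Small numbers are used here only to keep the example readable.
recorder = LatencyRecorderSketch(max_expected_latency_ns=100,
                                 max_errors_to_log=1, warmup_count=2)
for value in [5, 7, 50, 250, 300]:
    recorder.record(value)
```

In this run, the first two measurements are skipped as warm-up, both oversized values are clamped to the maximum, and only the first of them is logged.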

Trading Connectors

Trading connectors are configured like algorithms. Key differences:

- Each connector typically requires connection parameters to the execution venue to be explicitly defined. This could range from FIX Session credentials for FIX-based venues, to API keys for crypto venues. Remember that you can hash sensitive information or use the secrets as a service approach described above.

- To make each connector configuration more compact, Deltix provides connector configuration templates that predefine most common configuration parameters. For example, an API gateway host and port or trading session schedules.

Here is an example of a connector configuration:

include classpath("fix-connectors.conf") // (1)

connectors {

ES : ${template.connector.fix.es} { // (2)

settings : {

host = localhost

port = 9998

senderCompId = EMBER

targetCompId = DELTIX

password = null

execBrokerId = "TWAP"

schedule = {

zoneId = "America/Chicago"

intervals = [

{

startTime = "17:00:00",

endTime = "16:00:00",

startDay = SUNDAY,

endDay = FRIDAY

}

]

}

}

}

}

Notes:

- (1) Here we import connector configuration templates.

- (2) This line defines the trading connector “ES” based on the connector.fix.es configuration defined in the template file imported above.

See the Trading Connector Developer’s Guide for more information.

Fee Schedule

You can define a custom fee schedule (commissions) for a trading connector. See the Deltix Commission Rules Engine document for more information.

Here, we just provide a sample of a configuration with this feature:

COINBASE: ${template.connector.COINBASE} {

...

feeSchedule {

fees: [

{ tradingVolume = 0, takerFee = 0.25, makerFee = 0.15 }

{ tradingVolume = 100000, takerFee = 0.20, makerFee = 0.10 }

{ tradingVolume = 1000000, takerFee = 0.18, makerFee = 0.08 }

{ tradingVolume = 10000000, takerFee = 0.15, makerFee = 0.50 }

{ tradingVolume = 50000000, takerFee = 0.10, makerFee = 0.00 }

// etc ...

]

}

}
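The sketch below shows one plausible way to read such a fee table: pick the tier with the highest tradingVolume threshold that does not exceed the current trading volume. This selection rule is an assumption made for illustration; the Deltix Commission Rules Engine document is the authority on how tiers and fee units are actually applied. The function name select_fee_tier is hypothetical, and only the first three tiers of the sample are reproduced.

```python
from bisect import bisect_right

# First three tiers copied from the sample configuration above.
FEES = [
    {"tradingVolume": 0, "takerFee": 0.25, "makerFee": 0.15},
    {"tradingVolume": 100_000, "takerFee": 0.20, "makerFee": 0.10},
    {"tradingVolume": 1_000_000, "takerFee": 0.18, "makerFee": 0.08},
]


def select_fee_tier(fees, trading_volume):
    """Return the tier whose threshold is the largest one <= trading_volume.

    Assumed semantics, for illustration only.
    """
    tiers = sorted(fees, key=lambda tier: tier["tradingVolume"])
    thresholds = [tier["tradingVolume"] for tier in tiers]
    index = bisect_right(thresholds, trading_volume) - 1
    if index < 0:
        raise ValueError("trading volume is below the lowest tier")
    return tiers[index]


tier = select_fee_tier(FEES, 250_000)
```

Under this assumption, a trading volume of 250000 falls into the second tier.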

Fee Conversion

In addition to computing an exchange commission (fees), Ember can be configured to convert commissions to the order currency (term/quoted currency). For example, for BINANCE, the commission is provided in BNB currency. This feature converts all BNB-based commission amounts to the term (quote) currency of the trade. For example, BTC for a LINK/BTC trade, or USDT for a BTC/USDT trade:

BINANCE: ${template.connector.binance} {

…

feeConversion: true

}

DMA vs. Non-DMA Connectors

Most trade connectors provide direct access to the market (execution venue). We call these connectors DMA (Direct Market Access) connectors. In some special cases, an Ember connector may send orders to a remote destination that is served by another Ember instance. The difference may be important for risk computations.

For example, we may have a distributed system where a NY4 Ember server (hosted in Secaucus, NJ) submits orders to the Nasdaq and NYSE exchanges, but also sends orders to another Ember Server co-located with CME (hosted in Aurora, IL). In this case, the NY4 Ember may want to consider the NYSE and NASDAQ connectors as DMA, but consider the CME connector (which is actually a “proxy” connector to another Ember instance) as non-DMA.

By default, all connectors are considered DMA. If you want to change that, use the isDMA flag in the connector configuration:

CME: ${template.connector.delitxes} {

isDMA: false

settings { ... }

}

Please note that connectors may transmit either simple orders (MARKET, LIMIT, etc.) or complex algo orders (e.g., TWAP). The former orders are sometimes called DMA orders. There is some similarity, but these notions are not related: a DMA connector may send algo orders; also, a non-DMA connector may send DMA orders.

Trading Simulators

The Ember includes two simulators:

SIM - Executes limit orders according to their price and supports simple play scripts. SIM can be enabled as follows:

connectors {

SIM: ${sim}

}

More information about SIM can be found here.

SIMULATOR - Uses market data to realistically execute orders. Note: This simulator is actually an execution algorithm.

algorithms {

SIMULATOR: ${template.algorithm.SIMULATOR} {

subscription {

streams = ["COINBASE", "BINANCE", "BITFINEX", "GEMINI", "KRAKEN"]

}

}

}

More information about the SIMULATOR can be found here.

Ember Monitor Balance Publisher

This Ember Monitor backend component, when present, streams information about the available balance on exchanges to WebSocket subscribers (such as the Ember Monitor app). It relies on a TimeBase stream produced by the similarly named Balance Publisher (also known as Exchange Publisher). Ember Monitor displays available per-exchange balances in the Exchange/Currency position tables inside the Positions panel.

The configuration stanza below describes which TimeBase should be used, in which TimeBase stream to find balance information, and how often WebSocket subscribers should be updated. Default values should work for most cases:

balancePublisher {

timebaseUrl = ${timebase.settings.url}

streamKey = balances

publishInterval = 15s

}

Risk and Positions

There is a separate document that describes how positions are tracked and how risk limits are applied.

Here, we simply show one example of such a configuration:

risk {

riskTables: {

Destination/Currency: [MaxOrderSize, MaxPositionLong, MaxPositionShort]

Trader/Destination/Currency: []

TraderGroup/Destination/Currency: [MaxOrderSize,MaxPositionLong,MaxPositionShort]

Trader/Symbol: [MaxOrderSize,MaxPositionLong,MaxPositionShort]

}

allowUndefined: [Account, Exchange, Trader]

timeIntervalForFrequencyChecks: 1m

dailyPositionsResetTime: "17:00:00"

timeZone: "America/New_York"

}

See the settings section in the Risk and Positions tutorial for more information on these settings.

FIX API Gateway

The FIX Gateway configuration consists of three main pieces:

- The client database that defines FIX sessions

- The Market Data gateway that broadcasts market data to subscribers using FIX protocol

- The Order Entry gateway that processes trading flow from clients using FIX protocol

Market Data and Order Entry FIX Gateways implement the Executable Streaming Prices (ESP) data flow. There is a fourth component, the RFQ Gateway, that may complement or replace the Market Data gateway and implements the RFQ/RFS flow.

Here is an example of a simple configuration:

gateways {

clientdb { // (1)

factory = deltix.ember.service.gateway.clientdb.test.TestGatewayClientDatabaseFactory

settings {

clientCount = 50

tradeCompIdPrefix: "TCLIENT"

tradeBasePort: 9001

marketDataCompIdPrefix: "DCLIENT"

marketDataBasePort: 7001

}

}

marketdata { // (2)

PRICEGRP1 {

settings {

topic: "NIAGARA"

}

}

}

trade { // (3)

TRADEGRP1 {

}

}

}

Remember that gateway names should be alphanumeric (all capitals, no more than 10 characters).

FIX Market Data Gateway

gateways {

marketdata {

MD1 {

settings {

host: "10.10.1.15" # interface on which gateway opens server socket

senderCompId = DELTIX

topic: "DARKPOOL" # Alternative: streams: ["CBOT", "CME", "NYMEX"]

maxLevelsToPublish = 64

minimumUpdateInterval = 50ms

outputFeedType = LEVEL_2

useSyntheticLevel2QuoteIds = true

schedule = {

zoneId = "America/New_York"

intervals = [

{

startTime = "17:05:00",

endTime = "17:00:00",

startDay = SUNDAY,

endDay = SUNDAY

}

]

}

}

}

MD2 {

..

}

}

}

Settings

Brief description of Market Data gateway settings:

- host - server network interface to bind to. Will bind to all if unspecified. Note that each FIX session will use unique port (see client database description).

- senderCompId - specifies FIX tag SenderCompId(49) to be used by FIX session. Default is "DELTIX".

- topic or streams - specifies where market data will come from (single TimeBase topic or one or more TimeBase streams).

- maxLevelsToPublish - See FIX Gateway documentation.

- expectedMaxBookLevels - See FIX Gateway documentation

- maxInputLevels - See FIX Gateway documentation

- minimumUpdateInterval - for snapshot-only market data gateway mode this parameter controls throttling.

- types and symbols - limit the market data that the gateway publishes to specific message types and contract symbols. No filtering by default.

- nanosecondTimestamps - configures the gateway to include nanoseconds in UTCTimestamp tags like TransactTime(60) and SendingTime(52).

- incrementalMode

- addTransactTime

- addExchangeId - see "Exchange ID in Quotes" section below

- addTradeId - enables transaction ID in trades (communicated via MDEntryID(276) tag)

- transportIdleStrategy

You may configure multiple Market Data Gateways with different data sources, order book depth, etc.

Additional information about Market Gateway configuration parameters

Market Data Source

Each Market Data gateway can re-broadcast market data from one or several TimeBase streams, or a single TimeBase topic.

For TimeBase streams, pass a list of stream keys. Also, a TimeBase streams subscription can define optional filters by symbols or TimeBase message types (e.g., to filter out only Level2 market data for streams that contain both Level1 and Level2).

For example:

marketdata {

MD1 {

settings {

streams: [ "CBOT", "NYMEX" ]

symbols: "CLZ20, CLF21, CLG21, CLH21, CLJ21"

types: [ "deltix.timebase.api.messages.universal.PackageHeader" ]

...

The symbols filter is optional.

Market Data Type

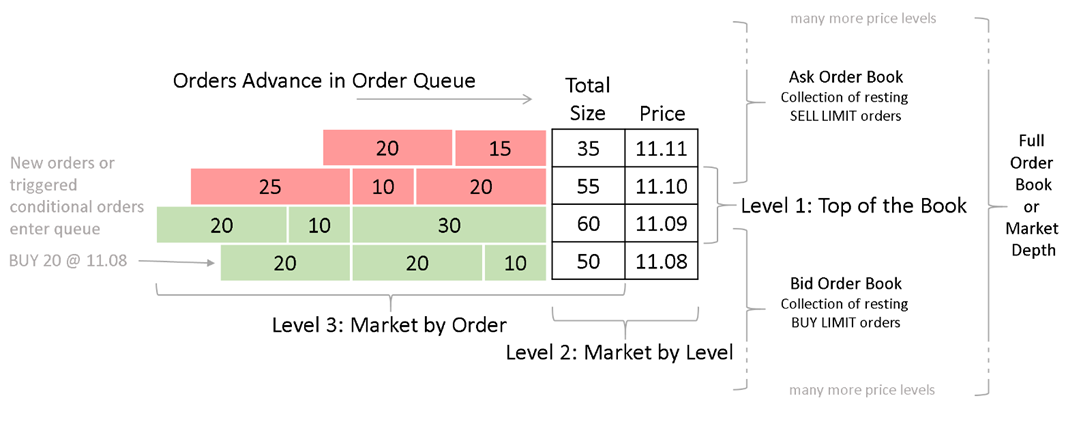

The following market data types are supported as inbound/outbound feeds:

- Level 3 – Market by Order: This is the most detailed representation of a market state where one can see individual orders sitting on the market.

- Level 2 – Market by Level: One can see how much liquidity in total we have at each price level. In this case, all same-priced orders are grouped into a single market data entry and their combined quantity is reported for each price level.

- Level 1 – Top of the Book: One can only see the best bid and best ask (BBO). The same as Level2 with the Order Book Depth limit set to 1.

Inbound Market Data Type

Configuring the primary type of inbound market data helps the internal order book processor in situations where a TimeBase stream or topic contains a mix of Level1/Level2/Level3 data. This parameter is optional. By default, the processor tries to auto-guess the inbound feed type.

marketdata {

MD1 {

settings {

inputFeedType: LEVEL_3

Outbound Market Data Type

You can configure the output type of market data.

Output data cannot use a more detailed level than the input data.

For example, the following configuration snippet configures the Level2 output format (Market By Level):

marketdata {

MD1 {

settings {

outputFeedType: LEVEL_2

Snapshots Only vs. Incremental Feed

The Deltix Market Data gateway supports two alternative market data feed distribution modes:

Periodic Snapshots only – Broadcasts Market Data Snapshot/Full Refresh (35=W) messages only. This mode is optimized for simplicity of client use and for a large number of connected clients. It broadcasts snapshots of the order book (top N levels) at a given interval (say 10 milliseconds).

marketdata: {

MD1 {

settings {

host: "0.0.0.0"

streams: "COINBASE"

minimumUpdateInterval = 10ms

maxLevelsToPublish = 150

outputFeedType = LEVEL_2

}

}

}

Incremental Updates and Snapshots – Broadcasts both Incremental Refresh (35=X) and periodic Market Data Snapshot/Full Refresh (35=W) messages. This mode is designed for low latency market data dissemination. It allows delivering every market data update to a smaller number of FIX clients. Most examples in this section describe a snapshots only gateway. This is an example of an incremental market data gateway configuration:

marketdata: {

MD1 : ${template.gateway.marketdata.incremental} {

settings {

host: "0.0.0.0"

streams: "NIAGARA"

expectedMaxBookLevels = 150

inputFeedType = LEVEL_2

outputFeedType = LEVEL_2

}

}

}

The snapshot only mode is typically configured to re-broadcast order book snapshots on every market change (subject to throttling). The incremental mode only rebroadcasts changes to the order book. In the incremental mode, market data snapshots are sent to each new subscriber and then periodically at a longer interval (e.g., every 15 seconds).

The incremental market data mode has the following requirements:

- If the source market data is Level3, FIX output requires Level3. If the source market data is Level2, FIX output requires Level2. In other words, the parameter inputFeedType must match outputFeedType.

- Order book depth in incremental mode cannot be controlled with the maxLevelsToPublish parameter. MDG simply re-broadcasts the same order book depth it gets as an input.

Exchange ID in Quotes

The Market Data Gateway can publish exchange code in each market data entry.

marketdata {

MD1 {

settings {

addExchangeId: true

When this flag is set, each Market Data Entry in Market Data Snapshot / Full Refresh FIX Message (35=W) contains the MDEntryOriginatorID(282) tag. This tag contains the exchange code of the market quote with the given price and size.

This option can be used for all output types, (not just Level2), and is disabled by default. For example, when publishing a feed of single data sources, (such as a single matching engine), it makes no sense to publish the same exchange ID in each entry.

Consolidated Level2 Output

When combined with an exchange ID, the output type Level 2 produces a so-called consolidated market data feed.

marketdata {

MD1 {

settings {

addExchangeId: true

outputFeedType: LEVEL_2

By default, this flag is disabled. (The Market Data Gateway assumes a simpler configuration when we re-broadcast market data from a single source).

Consider the situation when two exchanges, A and B, both have the best bid price on the market for a contract. Let us assume this price is $123.00. Imagine that exchange A has 10 lots at this price, and exchange B has 20 lots. That is, combined, we have 30 lots at $123.00.

Here is an output format example that shows a difference in output between these two formats:

| Consolidated Level2 (addExchangeId=true) | Aggregated Level2 output (addExchangeId=false) |

|---|---|

| MDEntry { side=bid, size=10, price=123.00, exchange=A } MDEntry { side=bid, size=20, price=123.00, exchange=B } | MDEntry { side=bid, size=30, price=123.00 } |

The consolidated feed mode respects the maximum order book depth limit (see the next section). In this case, the total number of entries from one side of the book, (including same-priced entries from different exchanges), counts towards this limit.
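The difference between the two formats can be reproduced with a short Python sketch. It takes the two consolidated entries from the example above and collapses them by side and price, which yields the aggregated view. The function name aggregate_by_price and the dictionary layout are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def aggregate_by_price(entries):
    """Collapse per-exchange entries into one entry per (side, price)."""
    totals = defaultdict(int)
    for entry in entries:
        totals[(entry["side"], entry["price"])] += entry["size"]
    return [
        {"side": side, "price": price, "size": size}
        for (side, price), size in totals.items()
    ]


# Consolidated view: one entry per exchange at the same price.
consolidated = [
    {"side": "bid", "size": 10, "price": "123.00", "exchange": "A"},
    {"side": "bid", "size": 20, "price": "123.00", "exchange": "B"},
]

# Aggregated view: a single entry with the combined size.
aggregated = aggregate_by_price(consolidated)
```

Note that the consolidated view uses two entries for this price while the aggregated view uses one, which is why same-priced entries from different exchanges count separately towards the depth limit.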

Order Book Depth

You can limit the maximum number of order book levels (MDEntries on each side of the market) published by the Market Data Gateway.

marketdata {

MD1 {

settings {

maxLevelsToPublish: 10

Publishing too many levels for liquid markets can significantly increase bandwidth consumption.

Feed Aggregation Type

While this parameter can be inferred, you can explicitly define it to be one of the following:

- NONE - Order book that processes market data from a single exchange only. This mode is faster and uses less memory. Supported for L1, L2, L3 feeds.

- AGGREGATED - Aggregated view of multiple exchanges, where you can see the combined size of each price level. Supported for L2 feeds.

- CONSOLIDATED - Consolidated view of the market from multiple exchanges, where you can see individual exchange sizes. Currently only supported for L2.

marketdata {

MD1 {

settings {

feedAggregationType: NONE

Compact order book

A performance feature introduced in December 2023 that uses fixed-size bid/size arrays for bookkeeping. Supported for fixed-depth single-exchange L2 order books only.

marketdata {

MD1 {

settings {

compact: true # default is false

...

Security Types

Special settings securityTypeMapping and customSecurityTypeMapping configure FIX mapping of security types to TimeBase Instrument types. The following example shows default mapping of standard instrument types that can be customized:

marketdata {

MD1 {

settings {

securityTypeMapping: [

{instrumentType: EQUITY, fixSecurityType: "CS"},

{instrumentType: INDEX, fixSecurityType: "XLINKD"},

{instrumentType: FX, fixSecurityType: "FOR"},

{instrumentType: FUTURE, fixSecurityType: "FUT"},

{instrumentType: OPTION, fixSecurityType: "OPT"},

{instrumentType: BOND, fixSecurityType: "BOND"},

{instrumentType: SYNTHETIC, fixSecurityType: "MLEG"},

{instrumentType: ETF, fixSecurityType: "ETF"},

]

}

}

}

In addition, you can define mapping for instruments that are represented by InstrumentType.CUSTOM:

marketdata {

MD1 {

settings {

securityTypeMapping: [ ... ]

customSecurityTypeMapping: [

{typeName: "TERM", fixSecurityType: "TERM"}, # Term Loan

{typeName: "STN", fixSecurityType: "STN"}, # Short Term Loan

{typeName: "CD", fixSecurityType: "CD"}, # Certificate of Deposit

]

}

}

}

Subscription by Security Type

The special setting enableSecurityTypeSubscriptions enables subscription to market data by security type (otherwise, only subscription by individual instrument symbol is supported). When enabled, MarketDataRequest(35=V) supports subscription using SecurityType(167). For example, 167=FUT subscribes to all FUTURE instruments configured in the system.

marketdata {

MD1 {

settings {

enableSecurityTypeSubscriptions: true # false by default

Statistic Entries

Special settings statisticsTypes and customStatisticTypes configure FIX mapping of market data statistics reported in market data messages into FIX (when supported by upstream feed):

marketdata {

MD1 {

settings {

statisticsTypes: [OPENING_PRICE, TRADING_SESSION_HIGH_PRICE, TRADING_SESSION_LOW_PRICE, TRADE_VOLUME, OPEN_INTEREST]

customStatisticTypes: [

{originalType="Previous_Close", entryType="e", valueTag=MDEntryPx},

{originalType="Turnover", entryType="x", valueTag=MDEntryPx},

{originalType="Trades", entryType="y", valueTag=MDEntrySize},

{originalType="Static_Reference_Price", entryType="6", valueTag=MDEntryPx},

{originalType="Trade_Volume_NumberOfOrders", entryType="B", valueTagInt=346}, # NumberOfOrders<346>

]

}

}

}

- statisticsTypes - lists the standard statistics entry types supported out of the box (with a pre-configured set of accompanying statistic value tags). For example, for the TRADE_VOLUME market data entry type, the out-of-the-box mapping populates tag MDEntryType(269)=B and places the observed trade volume into tag MDEntrySize(271).

- customStatisticTypes - defines custom MD Entry types and enriches standard statistics-related MD group entries with additional tags. For example, the TimeBase entry type Trade_Volume_NumberOfOrders in the configuration shown above adds tag NumberOfOrders(346) to MDEntryType(269)=B.

Trading Session Mappings

The special setting tradeSessionStatusMapping maps trading session statuses reported via the TimeBase TradingSessionStatusMessage into FIX status codes:

marketdata {

MD1 {

settings {

tradeSessionStatusMapping: [

{originalStatus = "Halt", tradSesStatus = 0},

{originalStatus = "Regular Trading", tradSesStatus = 1},

{originalStatus = "Opening Auction Call", tradSesStatus = 2},

{originalStatus = "Session Exit", tradSesStatus = 24}

]

}

}

}

Alternative Security ID

When the TimeBase security metadata stream contains additional security identifiers like CUSIP or ISIN codes (defined as custom attributes), the FIX Gateway may use this information in the SecurityList API.

For example the following configuration:

marketdata {

MD1 {

settings {

securityAltIds: [

{columnName: "isin", securityIDSource: "4"},

{columnName: "ric", securityIDSource: "5"}

]

}

}

}

Will populate the security definition in the SecurityList(35=y) message:

- NoSecurityAltId(454)=2

- SecurityAltId(455)=DE0007664039

- SecurityAltIdSource(456)=4 // ISIN

- SecurityAltId(455)=VOW3.DE

- SecurityAltIdSource(456)=5 // RIC
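The mapping from the securityAltIds setting to the FIX repeating group can be sketched as follows. The sketch uses the standard FIX tag numbers NoSecurityAltID(454), SecurityAltID(455), and SecurityAltIDSource(456). The function name and the rule that instruments lacking an attribute simply skip that entry are assumptions for illustration, not documented gateway behavior.

```python
def build_security_alt_id_group(security_alt_ids, attributes):
    """Build (tag, value) pairs for the SecurityAltID repeating group.

    security_alt_ids mirrors the securityAltIds setting; attributes holds
    the instrument's custom attributes keyed by column name.
    """
    pairs = []
    for mapping in security_alt_ids:
        value = attributes.get(mapping["columnName"])
        if value is not None:  # assumed: missing attributes are skipped
            pairs.append((mapping["securityIDSource"], value))

    fields = [(454, str(len(pairs)))]   # NoSecurityAltID
    for source, value in pairs:
        fields.append((455, value))     # SecurityAltID
        fields.append((456, source))    # SecurityAltIDSource
    return fields


config = [
    {"columnName": "isin", "securityIDSource": "4"},
    {"columnName": "ric", "securityIDSource": "5"},
]
instrument = {"isin": "DE0007664039", "ric": "VOW3.DE"}
group = build_security_alt_id_group(config, instrument)
```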

Transact Time

The Market Data Gateway can publish a market data timestamp with each message.

marketdata {

MD1 {

settings {

addTransactTime: true

When this flag is set, each Market Data Snapshot / Full Refresh FIX Message (35=W) contains the TransactTime(60) tag.

Warm Up

This optional parameter defines how far in the past the gateway starts reading market data (applicable only to durable streams as a market data source). This helps to warm-up the state of the internal order book processor in advance (before the gateway starts to serve market data subscription requests).

marketdata {

MD1 {

settings {

warmUpDuration: 15s

FIX Order Entry Gateway

gateways {

trade {

OE1 {

settings {

host: "0.0.0.0" # interface on which gateway opens server socket

senderCompId = DELTIX

schedule = {

zoneId = "America/New_York"

intervals = [

{

startTime = "17:05:00",

endTime = "17:00:00",

startDay = SUNDAY,

endDay = SUNDAY

}

]

}

}

}

OE2 {

...

}

}

}

FIX Order Entry Gateway Settings

- heartbeatInterval - Interval between FIX session heartbeat messages (default is 30s).

- testRequestTimeout - Timeout for a test request, after which our side considers the other side as disconnected (default is 45s).

- keepAliveTimeout - FIX session is terminated after this timeout if there were no inbound messages within the specified time (default is 60s).

- authenticationTimeout - maximum wait time for asynchronous authentication (if configured). Affects LOGON timeout.

- logonTimeout - how long to wait for LOGON confirmation message

- logoutTimeout - how long to wait for LOGOUT confirmation message

- sessionReset - Enables/disables in-session sequence number reset support (default is true).

- sessionResetTimeout - Used by continuous FIX sessions (default is 5s).

- senderSeqNumLimit - Used by continuous FIX sessions to initiate an in-session sequence number reset (default is 1000000000).

- targetSeqNumLimit - Used by continuous FIX sessions to initiate an in-session sequence number reset (default is 1000000000).

- traderIdResolution - See the section below.

- unbindPortsWhenDisabled

- measureActiveTime - Enables a session's ActiveTime counter. Adds some CPU overhead (default is false).

- sendEmberSequence - Appends the Ember Journal sequence number to outbound FIX messages that are execution reports (default is false).

- sendCustomAttributes - When enabled, custom attributes from order events are encoded as FIX tags in the outbound messages that the gateway sends out (default is false). Note that custom attributes specified on inbound FIX order requests are always parsed and end up on internal Ember request messages (also subject to the customAttributesSet/maxCustomTag filter defined below).

- customAttributesSet - Comma-separated list of custom FIX tags that will be copied to OrderRequest.attributes. Tag ranges are also supported, for example "80,1024,6000-8999". This parameter also requires the maxCustomTag parameter.

- maxCustomTag - (Deprecated and no longer used in 1.14.234) This parameter defines the upper bound for custom FIX tags that will be processed in inbound FIX messages and converted to custom order attributes inside Ember (default is 9999). This parameter should be used together with customAttributesSet.

- maxOrderRequestTimeDifference - This parameter helps detect order requests that spent too much time in transit to Ember OMS (default is 45 seconds). Make sure the order source periodically synchronizes the local clock with some reliable source.

- sessionSupportNewsMessage - suppress News(35=B) messages that notify FIX subscribers about trading connector status.
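To make the customAttributesSet format concrete, the following Python sketch expands a value such as "80,1024,6000-8999" into the set of individual tag numbers. It only illustrates the comma-and-range syntax described above. The function name parse_tag_set is hypothetical, and treating ranges as inclusive on both ends is an assumption.

```python
def parse_tag_set(spec):
    """Expand a comma-separated list of tags and tag ranges into a set of ints."""
    tags = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            low, high = (int(bound) for bound in part.split("-", 1))
            if low > high:
                raise ValueError(f"invalid tag range: {part}")
            tags.update(range(low, high + 1))  # assumed inclusive bounds
        else:
            tags.add(int(part))
    return tags


allowed = parse_tag_set("80,1024,6000-8999")
```

A gateway-side filter could then be a simple membership check, for example `tag in allowed`.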

FIX Request Transformer

The Order Entry Gateway can transform inbound order requests before they are placed into the Ember OMS queue. This customizable logic can be used, for example, to correct the order destination.

Here is an example of a built-in transformer that modifies the Destination fields on each order request based on the specified Exchange.

Transformers are defined under the gateways.trade.<gateway-id>.settings section:

gateways {

trade {

OE1: {

…

settings {

transformer: {

factory = "deltix.ember.service.engine.transform.CaseTableMessageTransformerFactory"

settings {

rules: [

// (Destination1)? | (Exchange1)? => (Destination2)? | (Exchange2)?

"*|DELTIXMM => DELTIXMM|DELTIXMM",

"*|HEHMSESS1 => HEHMSESS1|HEHMSESS1",

"*|HEHMSESS2 => HEHMSESS2|HEHMSESS2"

]

}

}

}

}

}

}

Your hook must implement the Factory<deltix.ember.service.engine.transform.OrderRequestTransformer> interface.
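The rule strings in the example follow the pattern shown in its comment: a Destination|Exchange pair to match, then "=>", then the Destination|Exchange pair to apply. The Python sketch below illustrates one possible reading of that format. It is not the CaseTableMessageTransformerFactory implementation. The helper names are hypothetical, and the following behaviors are assumptions: "*" or an empty field matches anything, "*" or an empty field on the right side leaves the value unchanged, and the first matching rule wins.

```python
RULES = [
    "*|DELTIXMM => DELTIXMM|DELTIXMM",
    "*|HEHMSESS1 => HEHMSESS1|HEHMSESS1",
    "*|HEHMSESS2 => HEHMSESS2|HEHMSESS2",
]

WILDCARDS = ("*", "")


def parse_rule(rule):
    """Split 'Dest1|Exch1 => Dest2|Exch2' into its four fields."""
    match_part, result_part = (side.strip() for side in rule.split("=>"))
    match_dest, match_exch = (field.strip() for field in match_part.split("|"))
    new_dest, new_exch = (field.strip() for field in result_part.split("|"))
    return match_dest, match_exch, new_dest, new_exch


def matches(pattern, value):
    return pattern in WILDCARDS or pattern == value


def transform(rules, destination, exchange):
    """Apply the first matching rule (assumed semantics); otherwise pass through."""
    for match_dest, match_exch, new_dest, new_exch in map(parse_rule, rules):
        if matches(match_dest, destination) and matches(match_exch, exchange):
            return (
                destination if new_dest in WILDCARDS else new_dest,
                exchange if new_exch in WILDCARDS else new_exch,
            )
    return destination, exchange


routed = transform(RULES, "SOR", "DELTIXMM")
```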

QuantOffice integration

The Order Entry Gateway has an additional attribute, sendEmberSequence, that provides the FIX tag UpstreamSeqNum(9998) to simplify state recovery for the QuantOffice Strategy Server.

Trader ID Resolution strategy

By default, the gateway uses the FIX tag SenderSubId(50) from the inbound message as the trader identity. This method follows an approach chosen by other exchanges, like CME iLink. If you want to override this, you can use the traderIdResolution setting of each order entry gateway.

- SESSION_ID – The FIX Session ID identified by the inbound tag SenderCompId(49) is used as the trader identity.

- SENDER_SUB_ID – This is the default method described at the beginning of this section. The value of the inbound FIX tag SenderSubID(50) is used as the trader identity.

- CUSTOMER_ID – The Ember configuration file provides a traderId with each FIX session.

- DTS_DATABASE – Each session may have one or more CryptoCortex User IDs (GUIDs) associated with it by the CryptoCortex Configurator (new since Ember 1.8.14). In this case, the FIX tag SenderSubId(50) must match the CryptoCortex user ID (GUID). The FIX Gateway validates that the order's user is indeed associated with a specific FIX session.

Example:

gateways {

trade {

OE1 {

settings {

traderIdResolution: CUSTOMER_ID

...
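The four strategies can be summarized as a small decision function. The Python sketch below only restates the descriptions above in code form. The function and parameter names are hypothetical, and raising an error when the DTS_DATABASE validation fails is an assumption about how a rejection might surface.

```python
def resolve_trader_id(strategy, sender_comp_id, sender_sub_id,
                      session_trader_id=None, session_user_ids=()):
    """Pick the trader identity for an inbound order (illustrative only)."""
    if strategy == "SESSION_ID":
        return sender_comp_id            # inbound SenderCompId(49)
    if strategy == "SENDER_SUB_ID":
        return sender_sub_id             # inbound SenderSubId(50), the default
    if strategy == "CUSTOMER_ID":
        return session_trader_id         # traderId configured for the session
    if strategy == "DTS_DATABASE":
        # SenderSubId(50) must be one of the user IDs linked to this session.
        if sender_sub_id not in session_user_ids:
            raise PermissionError("user is not associated with this FIX session")
        return sender_sub_id
    raise ValueError(f"unknown traderIdResolution: {strategy}")


trader = resolve_trader_id("CUSTOMER_ID", "TCLIENT1", "jdoe", session_trader_id="C1")
```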

FIX RFQ Gateway

The FIX RFQ Gateway implements both Request For Quotes (RFQ) and Request for Stream (RFS) message flows. It is typically used in combination with the Order Entry FIX gateway that takes previously quoted orders from RFQ/RFS quotes.

The following is a sample RFQ gateway configuration:

gateways {

rfq {

RFQ1 {

settings {

host: "10.10.1.15" # interface on which gateway opens server socket

senderCompId = DELTIX

inputStreamKey: "RFQ"

outputStreamKey: "RFQ"

schedule = {

zoneId = "America/New_York"

intervals = [

{

startTime = "17:05:00",

endTime = "17:00:00",

startDay = SUNDAY,

endDay = SUNDAY

}

]

}

}

}

RFQ2 {

..

}

}

}

See RFQ Data Model for the TimeBase data flow involved in RFQ/RFS. Typically, a special RFQ algorithm or RFQ-capable matching engine is involved in the flow.

FIX Session Schedule

For production environments, each FIX Gateway may define a FIX session schedule. A schedule can be defined for each Order Entry and Market Data gateway separately.

Starting from version 1.6.83, the FIX session schedule is optional. This means that the FIX gateway can operate 24/7, using in-session sequence number resets to periodically recycle sequence numbers.

Here is an example:

gateways {

trade {

OE1 {

settings {

// ... other settings here ...

schedule = {

zoneId = "America/New_York"

intervals = [

{

startTime = "17:05:00",

endTime = "17:00:00",

startDay = SUNDAY,

endDay = SUNDAY

}

]

}

}

}

}

}

That was an example of a weekly schedule. A daily schedule simply includes more intervals:

intervals = [

{

startTime = "17:05:00",

endTime = "17:00:00",

startDay = SUNDAY,

endDay = MONDAY

},

{

startTime = "17:05:00",

endTime = "17:00:00",

startDay = MONDAY,

endDay = TUESDAY

},

...

]
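One way to read the startDay/startTime/endDay/endTime fields shown above is as a window within the week that may wrap around the week boundary. The Python sketch below checks whether a given day and clock time fall inside such a window. It is an interpretation for illustration, not the gateway's scheduler. It assumes the inputs are already expressed in the schedule's zoneId, that the end time is exclusive, and that an interval whose end precedes its start wraps into the following week. The function names are hypothetical.

```python
from datetime import time

DAYS = ["MONDAY", "TUESDAY", "WEDNESDAY", "THURSDAY", "FRIDAY", "SATURDAY", "SUNDAY"]
SECONDS_PER_DAY = 24 * 60 * 60
SECONDS_PER_WEEK = 7 * SECONDS_PER_DAY


def second_of_week(day, clock):
    """Offset of (day, clock) from Monday 00:00:00, in seconds."""
    return (DAYS.index(day) * SECONDS_PER_DAY
            + clock.hour * 3600 + clock.minute * 60 + clock.second)


def is_session_active(interval, day, clock):
    """True if (day, clock) lies in [start, end) of the weekly interval."""
    start = second_of_week(interval["startDay"], time.fromisoformat(interval["startTime"]))
    end = second_of_week(interval["endDay"], time.fromisoformat(interval["endTime"]))
    now = second_of_week(day, clock)
    length = (end - start) % SECONDS_PER_WEEK      # handles wrap-around
    return (now - start) % SECONDS_PER_WEEK < length


weekly = {"startTime": "17:05:00", "endTime": "17:00:00",
          "startDay": "SUNDAY", "endDay": "SUNDAY"}
daily = {"startTime": "17:05:00", "endTime": "17:00:00",
         "startDay": "SUNDAY", "endDay": "MONDAY"}

midweek_active = is_session_active(weekly, "WEDNESDAY", time(12, 0))
in_weekly_gap = is_session_active(weekly, "SUNDAY", time(17, 2))
```

With the weekly interval, only the five minutes between Sunday 17:00 and 17:05 fall outside the session; with the daily interval, the session ends on Monday at 17:00.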

For clients who want to operate a FIX gateway 24/7, there are two limit parameters that force a sequence number reset:

gateways {

trade {

OE1 {

settings {

senderSeqNumLimit = 1000000000

targetSeqNumLimit = 1000000000

}

}

}

}

When these limits are reached, the FIX gateway side initiates an in-session sequence number reset by sending a special LOGON(A) message with the ResetSeqNumFlag(141)=Y tag.

Unless you define explicit schedule intervals, the FIX gateway works in the 24/7 mode.

Client Database

The FIX gateway supports various ways to define FIX sessions:

Test configuration for stress tests - Suitable for creating a large number (1000s) of nearly identical FIX sessions. You can see it in the example above (the area marked with (1)), where we configure 50 market data and order entry sessions.

Deltix CryptoCortex database. The CryptoCortex configurator GUI has a section (Operations/FIX Sessions) to define FIX gateways and their sessions. The following sample tells Ember to read it:

clientdb {

factory = deltix.ember.service.gateway.clientdb.jdbc.JdbcGatewayClientDatabaseFactory

settings {

driverClassName: "org.mariadb.jdbc.Driver"

connectionUri: "jdbc:mariadb://10.10.81.87:3306/CCDB"

username: "admin"

password: "EV3242ASEA3423FAE" #hashed

gatewayNames: "SLOWFIX" # comma-separated list

}

}

The Ember configuration file may have a simple list of all the sessions. Sometimes, it is very convenient to define a few existing FIX sessions there. Here is an example:

clientdb {

factory = deltix.ember.service.gateway.clientdb.simple.SimpleGatewayClientDatabaseFactory

settings {

OE1: [

{

senderCompId: TCLIENT1

port: 9001

customerIds: [C1]

gatewayName: OE1

gatewayType: FIX_ORDER_ENTRY

password: EV8aa147993e8c9c4424b696c698415dcf // hashed

}

{

senderCompId: TCLIENT2

port: 9002

customerIds: [C2, C3]

gatewayName: OE1

gatewayType: FIX_ORDER_ENTRY

password: EV8aa147993e8c9c4424b696c698415dcf

}

]

MD1: [

{

senderCompId: DCLIENT1

port: 7001

customerIds: [C1]

gatewayName: MD1

gatewayType: FIX_MARKET_DATA

password: EV8aa147993e8c9c4424b696c698415dcf

}

]

MD2: [

{

senderCompId: DCLIENT4

port: 7004

customerIds: [C4]

gatewayName: MD2

gatewayType: FIX_MARKET_DATA

password: EV8aa147993e8c9c4424b696c698415dcf

}

]

}

}

For more information, see the FIX Gateway Administrator’s Guide.

Custom Authentication

FIX Gateway supports a custom asynchronous authentication service.

authentication {

factory = "deltix.ember.connector.zmq.ZMQAuthenticatorFactory"

settings {

...

}

}

Source IP whitelisting

Typically, source IP whitelisting is delegated to the Load Balancer layer that is upstream of the FIX Gateway. In addition, the FIX Gateway has its own mechanism for checking the source client IP against the known public IP of each FIX session originator. Use the remoteAddress field to specify a source IP filter. You can specify a CIDR mask or a specific IP address. When the remoteAddress field is not specified, there is no restriction on source IP.

{

senderCompId: TCLIENT1

port: 9001

customerIds: [C1]

gatewayName: OE1

gatewayType: FIX_ORDER_ENTRY

password: EV8aa147993e8c9c4424b696c698415dcf // hashed

remoteAddress: "10.23.102.64/28" # source IP white listing CIDR. No restrictions if unspecified

}

For your convenience FIX Gateway logs remoteAddress IP of each incoming session. Look for messages like:

[MDG ID] Accepted connection from 10.23.102.66 to port 9001
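Before deploying a remoteAddress filter, it can be useful to check which client addresses a CIDR mask actually covers. The following Python sketch uses the standard ipaddress module for that purpose. It mirrors the documented behavior (CIDR mask or single IP, no restriction when unspecified) but is not the gateway's own code. The function name is hypothetical.

```python
import ipaddress


def is_source_allowed(remote_address, client_ip):
    """Check a client IP against a remoteAddress filter (CIDR or single IP)."""
    if remote_address is None:
        return True  # no restriction when remoteAddress is not specified
    network = ipaddress.ip_network(remote_address, strict=False)
    return ipaddress.ip_address(client_ip) in network


# The /28 mask from the example covers 10.23.102.64 through 10.23.102.79.
allowed = is_source_allowed("10.23.102.64/28", "10.23.102.66")
rejected = is_source_allowed("10.23.102.64/28", "10.23.102.80")
```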

REST/WebSocket API Gateway

The Ember can provide a REST/WebSocket API interface to Order Entry, Risk, and Positions. When enabled, this API gateway service runs inside the Ember Monitor. More information about this API can be found here.

The REST/WS Gateway uses API keys to authenticate users.

The following configuration stanza can be used to enable the REST/WS API gateway and define a list of supported API keys and secrets:

monitor {

gateway {

accounts = [

{

"sourceId" = "CLIENT1",

"apiKey" = "w6AcfksrG7GiEFoN",

"apiSecret" = "gZ0kkI9p8bHHDaBjO3Cyij87SrToYPA3" // consider storing secrets in Vault

},

… more API keys

]

}

}

Each API account is mapped to Ember’s Source ID (the normal way to identify the source of API requests).

The gateway may support multiple API client accounts. Each REST/WS client is mapped to a specific order source. The order source is a fundamental concept in Ember and is described in the Data Model document. You can open access to the REST/WS API to multiple clients (sources).

By default, Ember ensures that each client only submits orders on behalf of that client's source ID. When a client lists active orders or fetches the current position, the API gateway ensures that the client can only see orders and positions that were sourced by this client.

As of today, the Ember Monitor does not contain a UI to generate API keys and secrets. We recommend using the widely available long password generators to create these keys and secrets. Here are a few resources:

- https://www.lastpass.com/features/password-generator

- https://my.norton.com/extspa/passwordmanager?path=pwd-gen

The API key is used only for identification. The API secret is used for cryptographic signing and signature verification. There are no restrictions on the content or length of the API secret, but secrets longer than 128 bytes do not add strength, because the secret is hashed down first.

We recommend storing API key secrets in HashiCorp Vault (see the External Secret as a Service section above).

At minimum, store all this information hashed using the mangle tool described here.

Orders submitted using the REST API are validated as they pass through the gateway and can be rejected immediately if, for instance, they include custom attributes with tags outside the default range 6000-8999. If necessary, the accepted range can be customized using the customAttributesSet parameter, which accepts ranges and individual tags separated by commas:

monitor {

gateway {

customAttributesSet = "6000-9999,80,1024"

}

}

Data Warehouse

The Ember can stream orders and trading messages to a database of the client's choosing. Typically, this is used for data archiving and settlement purposes.

There are separate documents that describe how to set this up for each specific database:

- Data warehousing into AWS S3

- Data warehousing into ClickHouse

- Data warehousing into RedShift

- Data warehousing into TimeBase

- Data warehousing into Kafka

- Data warehousing into SQL Server

Before we describe these options in detail, here are a couple of complete examples of configured warehouse services.

S3:

warehouse {

s3 { # unit ID, used when you run the app; can be any name

live = true # false for batch mode (true is default)

commitPeriod = 1h # period at which a partial (not full) batch is committed (default is 5s)

messages = [

${template.warehouse.s3.messages} { # loader which loads order messages

filter.settings.inclusion = null # EVENTS or TRADES, null means no filter

loader.settings { # default # description

bucket = deltix-ember-algotest # AWS S3 bucket name

region = us-east-2 # - # AWS S3 bucket region

accessKey = EV***************************************

secretKey = EV***************************************

maxBatchSize = 200000

minBatchSize = 0

}

}

]

orders = [

${template.warehouse.s3.orders} { # loader which loads closed orders

loader.settings { # default # description

bucket = deltix-ember-algotest-s3 # AWS S3 bucket name

region = us-east-2 # - # AWS S3 bucket region

accessKey = EV***************************************

secretKey = EV***************************************

maxBatchSize = 200000

minBatchSize = 0

}

}

]

}

}

TimeBase:

warehouse {

timebase { # unit ID, used when you run the app; can be any name

live = true

storeCustomAttributes = true

messages = [

${template.warehouse.timebase.messages} { # loader which loads order messages

filter.settings.inclusion = null # EVENTS or TRADES, null means all

loader.settings { # default # description

url = ${timebase.settings.url} # ${timebase.settings.url} # server url

stream = "messages" # messages # stream name

clear = false # false # clear stream on startup

purgePeriod = 1d # 0 # purge stream repeatedly at period

purgeWindow = 2d # 0 # purge stream up to (now - purge window)

}

}

]

orders = [

${template.warehouse.timebase.orders} { # loader which loads closed orders

loader.settings { # default # description

url = ${timebase.settings.url} # ${timebase.settings.url} # server url

stream = "orders" # orders # stream name

clear = false # false # clear stream on startup

purgePeriod = 7d # 0 # purge stream repeatedly at period

purgeWindow = 14d # 0 # purge stream up to (now - purge window)

}

}

]

}

}

Live vs. Batch mode

You can run the Data Warehouse service in two modes:

- Live mode – The service exports data in near real-time. The service waits for new data to appear.

- Batch mode – The service exports all existing data and exits. Batch mode is suitable for periodic cron-based runs.

For example:

warehouse {

redshift {

live: true

…
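
For a cron-driven batch run, the same flag is set to false, as the comment in the S3 example above indicates. A minimal sketch, with the rest of the unit elided as before:

warehouse {

redshift {

live: false # batch mode: export all existing data, then exit

…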

Filters

You can define a filter for messages that are stored in a data warehouse.

When provided, the “include” filter only allows requests with given source IDs or events with given destination IDs. When this filter is omitted, all messages are stored.

When provided, the “exclude” filter explicitly keeps requests with given source IDs or events with given destination IDs out of the data warehouse.

Example:

warehouse {

timebase {

messages = [

${template.warehouse.timebase.messages} {

filter {

settings {

source {

includes = ["CWUI", "TRADER1"]

}

}

}

}

]

}

}
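
An “exclude” filter can presumably be written the same way. In the sketch below, the key name excludes is an assumption made by analogy with includes and should be checked against the warehouse documentation for your Ember version. The source ID is illustrative only.

warehouse {

timebase {

messages = [

${template.warehouse.timebase.messages} {

filter {

settings {

source {

excludes = ["TRADER1"] # assumed key name, by analogy with "includes"

}

}

}

}

]

}

}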

Data Warehouse performance

Several parameters can be used to tune data warehouse performance and responsiveness:

commitPeriod – Defines how often to force a commit of an accumulated batch, even if it is a less than optimal batch size (e.g., 5 seconds). Only applicable for Live mode. NOTE: update fence may interfere with this parameter.

readBatchLimit – How much data is read from Ember journal at once (e.g., 64 K).

loader.settings.batchLimit – Defines the optimal batch size for write operations. Different databases may have different ideal batch sizes for an optimal injection rate. Default is 65536. NOTE: Applicable only for data warehouses that use a JDBC driver (RDS SQL, Redshift, ClickHouse). This parameter simply forces our code to call Statement.commit after every batchCommit number of inserts.

loader.settings.minBatchSize – Controls batching for the S3 data warehouse pipeline. When streaming data into Amazon S3, we format each message as a JSON object and gzip batches of these messages into files on the S3 bucket. From this angle, it would be very inefficient and cost prohibitive to store small groups of data based on the commitPeriod parameter defined above. The parameter minBatchSize acts as a guard for time-based commits.

warehouse {

s3 {

live = true

commitPeriod = 15m

readBatchLimit: 64K

messages = [

${template.warehouse.s3.messages} {

loader.settings {

…

minBatchSize = 100

}

}

]

}

}
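
For JDBC-based warehouses, the write batch size is set under loader.settings in the same way. The sketch below rests on assumptions: the unit name clickhouse and the template path ${template.warehouse.clickhouse.messages} are inferred by analogy with the S3 and TimeBase examples and are not confirmed here. The value 65536 is the default stated above.

warehouse {

clickhouse { # assumed unit name

live = true

readBatchLimit: 64K

messages = [

${template.warehouse.clickhouse.messages} { # assumed template path

loader.settings {

…

batchLimit = 65536 # write batch size for JDBC-based loaders

}

}

]

}

}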

Data Warehouse restart strategy

The destination warehouse may not always be available. Each service that streams data into a warehouse can be configured to handle this differently:

warehouse {

sample-unit { # for example: clickhouse

restartStrategy {

policy = ON_FAILURE # ALWAYS, NEVER

delay {

min = 15s

max = 5m

}

}

}

}

As you can see, the gateway provides an ability for several clients (represented by order sources) to send trading requests using pre-configured API keys.

Please note that here, as well as in all other places, the source ID should use an ALPHANUMERIC(10) data type (basically limited to capital ASCII letters, digits, and some punctuation characters).

By default, when an error occurs (e.g., a disconnect), the service retries with an exponential back-off delay before it restarts streaming data. To handle failures outside of the application, set policy = NEVER; the application then exits with an error code. The ALWAYS and ON_FAILURE policies behave identically in live mode (the default), because the application waits for new data and does not terminate when it reaches the end.
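
As a minimal sketch of the externally managed case, the unit below disables in-process restarts so that an outside supervisor can react to the exit code. The unit name clickhouse is taken from the comment in the example above. The delay settings are left out on the assumption that they are irrelevant when no restart is attempted.

warehouse {

clickhouse {

restartStrategy {

policy = NEVER # exit with an error code instead of retrying

}

}

}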

Updatable active orders in SQL data warehouses

Normally, Ember Data Warehouses store completed orders only (that is, when each order reaches a final state, whether it is COMPLETELY_FILLED, CANCELLED, or REJECTED).